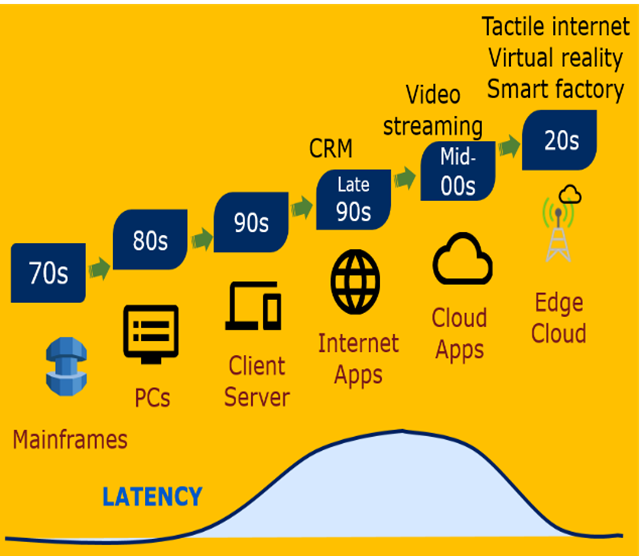

New application needs have always sparked human innovation. A decade ago, cloud computing enabled high-value enterprise services with global reach and scale, but with delays of seconds to minutes. Today, we stream on-demand and time-shifted HD or 4K video from the cloud with delays of hundreds of milliseconds. In the future, the need for increased efficiency and reduced latency between measurement and action will drive the development of real-time methods for feature extraction, computation and machine learning on streaming data.

Our focus is on enabling applications to make efficient use of limited computing resources close to users and sensors (rather than resources in the cloud) for AI processing such as feature extraction, inference and periodic re-training of tiny, dynamic, contextualized AI models. Such edge-cloud processing will avoid incurring delays of 100 milliseconds or more in reaching the cloud and will protect the personal privacy of stream data used for training. But it won’t be easy to develop. Barriers include the high programming complexity of efficiently using tiers of limited computing resources (in smart devices, the edge and the cloud), high processing delays due to limited edge resources, and the need for just-in-time adaptation to dynamic environments (changes in the content of data streams, the number of users or ambient conditions).

Publication Tags: stream processing