Agentic large language models (LLMs), which plan tasks and carry them out by calling external tools, mark a significant milestone in the rapidly evolving field of artificial intelligence. Our project, Agentic LLMs for AI Orchestration, is at the forefront of this work. We have engineered an LLM that solves complex workflows by integrating computer vision, logic, and compute modules. Given a task specified in natural language, the LLM dynamically generates a plan for executing it with the available tools and translates that plan into a synthesized Python program that deploys those tools. This adaptability rests on the LLM’s ability to assimilate new tools quickly from their documentation and code, a feature that sets it apart in the AI orchestration domain.

“Integrating various modules such as computer vision and logic within the LLM framework allows for a more holistic approach to solving complex tasks. The ability to generate and deploy Python programs based on natural language specifications is a game-changer in the field of AI orchestration,” said Vijay Kumar B G, a Media Analytics department team member.

Project Overview

Our agentic LLM is designed to understand and execute complex workflows. The starting point is a natural language task specification, from which the LLM generates a coherent plan. The plan is represented as a Python program, which is synthesized to invoke the necessary tools programmatically. One of the planner’s most significant strengths is its adaptability: it can incorporate new tools efficiently by interpreting their documentation and code. This keeps the LLM versatile and scalable across a wide range of tasks.
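
The plan-as-program idea can be illustrated with a minimal Python sketch. Everything in it is hypothetical and not taken from the project: the `register_tool` decorator, the two toy tools, and the hard-coded `GENERATED_PROGRAM` string that stands in for LLM output. An "image" is simplified to a list of object labels. The sketch shows how tool signatures and docstrings could be rendered as text for the planner to read, and how a generated program could then be run with only the registered tools in scope.

```python
import inspect
from typing import Callable, Dict

# Hypothetical tool registry: the planner would see each tool's name,
# signature, and docstring, i.e. its "documentation and code".
TOOLS: Dict[str, Callable] = {}


def register_tool(fn: Callable) -> Callable:
    """Add a function to the registry so generated programs can call it."""
    TOOLS[fn.__name__] = fn
    return fn


@register_tool
def detect_objects(image: list, label: str) -> list:
    """Return the indices of entries in `image` whose label matches `label`."""
    # Stand-in for a vision module: an "image" here is just a list of labels.
    return [i for i, obj in enumerate(image) if obj == label]


@register_tool
def count(items: list) -> int:
    """Return the number of items."""
    return len(items)


def tool_documentation() -> str:
    """Render the registry as text that could be placed in the LLM prompt."""
    return "\n".join(
        f"{name}{inspect.signature(fn)}: {inspect.getdoc(fn)}"
        for name, fn in TOOLS.items()
    )


def run_program(program: str, image: list):
    """Execute a generated plan with only the registered tools and `image` in scope."""
    scope = {"__builtins__": {}, "image": image, **TOOLS}
    exec(program, scope)
    return scope["answer"]


# A plan an LLM might emit for "How many cats are in the image?"
GENERATED_PROGRAM = """
cats = detect_objects(image, "cat")
answer = count(cats)
"""

if __name__ == "__main__":
    print(tool_documentation())
    print(run_program(GENERATED_PROGRAM, ["cat", "dog", "cat"]))  # -> 2
```

In this sketch, adding a new tool only requires decorating a new function, after which it appears in `tool_documentation()` for the planner to read. Emptying `__builtins__` limits what the generated program can call by name, but it is not a security sandbox; untrusted generated code would need real isolation.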

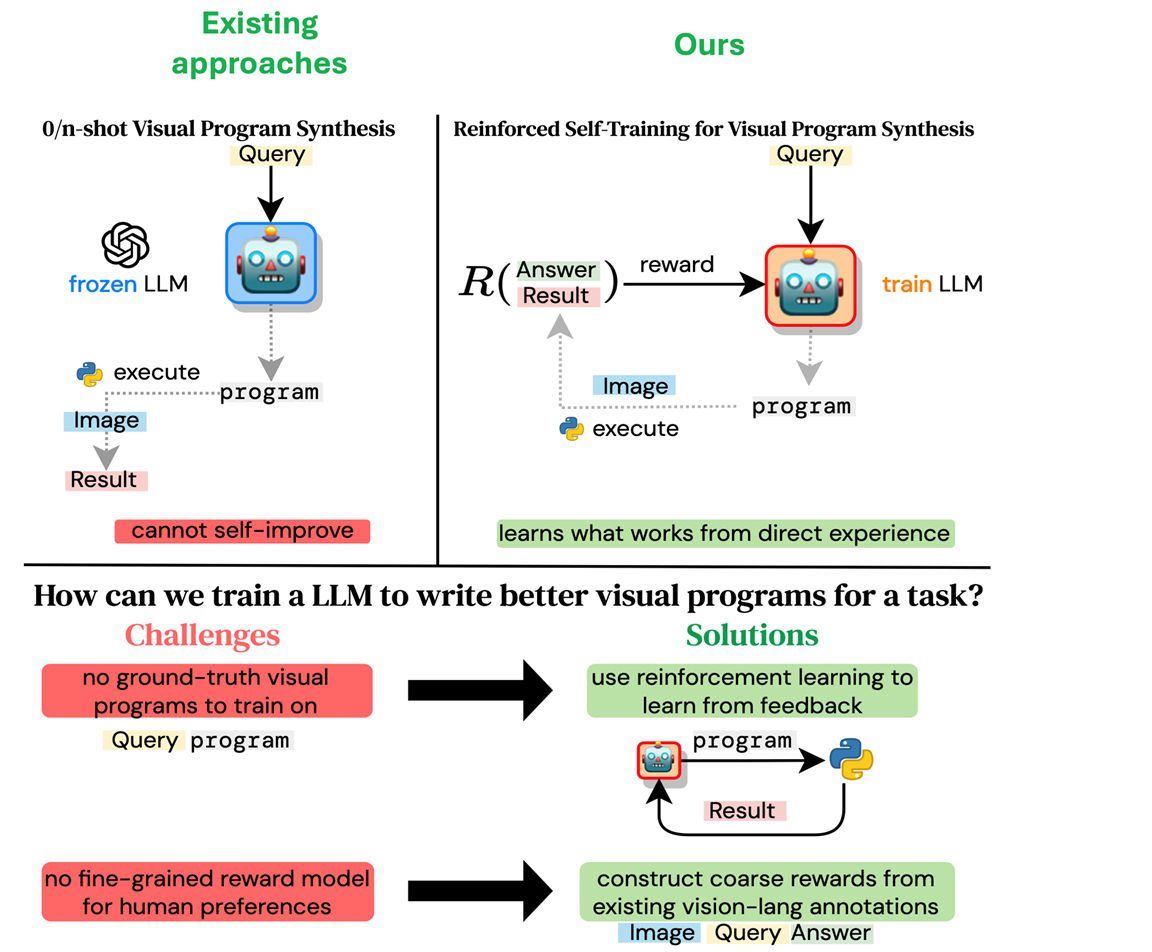

Our training methodology is designed for efficiency. It uses reinforced self-training with weak supervision: rather than requiring hand-written reference programs, the LLM is rewarded when the programs it generates produce outputs that match existing task annotations. This lets the LLM learn and adapt quickly, and human feedback can optionally be incorporated to refine its performance further. The approach improves training efficiency, and the resulting LLM outperforms competing models on benchmark visual reasoning tasks despite using fewer parameters.
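
One round of reinforced self-training with weak supervision can be sketched as a sample, execute, and filter loop. This is a minimal sketch under stated assumptions, not the project’s training code: `sample_programs` stands in for the LLM, weak labels are final answers only, the reward is a binary exact-match check, and the fine-tuning step on the kept pairs is omitted. The toy sampler and executor are hypothetical and use arithmetic expressions in place of visual programs.

```python
from typing import Callable, List, Tuple

# A weakly labeled example: a question plus its final answer only,
# with no reference program.
Example = Tuple[str, object]


def self_training_round(
    sample_programs: Callable[[str, int], List[str]],
    execute: Callable[[str], object],
    dataset: List[Example],
    num_samples: int = 4,
) -> List[Tuple[str, str]]:
    """Keep (question, program) pairs whose execution matches the weak label.

    In a full loop, the kept pairs would be used to fine-tune the model
    before sampling again in the next round (omitted here).
    """
    kept = []
    for question, label in dataset:
        for program in sample_programs(question, num_samples):
            try:
                result = execute(program)
            except Exception:
                continue  # a program that fails to run earns no reward
            if result == label:  # binary reward derived from the weak label
                kept.append((question, program))
                break  # keep at most one correct program per question
    return kept


def toy_sampler(question: str, n: int) -> List[str]:
    """Hypothetical stand-in for an LLM proposing candidate programs."""
    a, b = (int(x) for x in question.split("+"))
    candidates = [f"{a} / 0", f"{a} - {b}", f"{a} * {b}", f"{a} + {b}"]
    return candidates[:n]


def toy_execute(program: str) -> object:
    """Hypothetical executor: evaluate an arithmetic expression."""
    return eval(program, {"__builtins__": {}})


if __name__ == "__main__":
    data = [("2+3", 5), ("4+4", 8), ("5+5", 11)]  # last label is wrong
    print(self_training_round(toy_sampler, toy_execute, data))
    # -> [('2+3', '2 + 3'), ('4+4', '4 + 4')]
```

The third example carries a wrong weak label, so no candidate matches and it contributes nothing to the next round. Human feedback, where available, could be applied as an additional filter on the kept pairs.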

Media Analytics department team member Samuel Schulter adds, “Our focus on reinforced self-training with weak supervision has been pivotal. It enables the LLM to adapt quickly and efficiently with minimal human intervention. This not only streamlines the workflow but also sets a new benchmark in AI performance.”

Our project builds on four foundational research papers, each of which contributed key insights to the development of our agentic LLM.

- Self-Training Large Language Models for Improved Visual Program Synthesis With Visual Reinforcement: This paper explores visual program synthesis as a way to leverage the reasoning capabilities of large language models for compositional computer vision tasks. Its emphasis on training LLMs to write better visual programs highlights the potential for significant improvements in AI orchestration.

- Exploring Question Decomposition for Zero-Shot VQA: This paper investigates a question decomposition strategy for visual question answering (VQA) to address the limitations of treating VQA as a single-step task. The findings underscore the importance of nuanced question-answering strategies, which are integral to our LLM’s ability to handle complex workflows.

- Q: How to Specialize Large Vision-Language Models to Data-Scarce VQA Tasks? A: Self-Train on Unlabeled Images: This research focuses on fine-tuning large vision-language models on specialized datasets, particularly when data is scarce. Its approach of self-training on unlabeled images is especially relevant to our project because it informs our methodology for training LLMs under data-constrained conditions.

- Single-Stream Multi-level Alignment for Vision-Language Pretraining: This paper highlights the effectiveness of self-supervised vision-language pretraining, discussing both the limitations of dual-stream architectures and the benefits of fine-grained alignment. These insights are crucial for optimizing our LLM’s performance when integrating its visual and language components.
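
The question decomposition strategy summarized above can be sketched as answering model-generated sub-questions first and then answering the original question with those answers as context. In this minimal sketch, `toy_decompose` and `toy_vqa` are hypothetical stand-ins for the LLM and the vision-language model, and the "image" is reduced to a dictionary of facts; the paper’s actual prompting and selection details are not reproduced.

```python
from typing import Callable, List


def answer_with_decomposition(
    question: str,
    decompose: Callable[[str], List[str]],
    answer: Callable[[str, str], str],
) -> str:
    """Answer each sub-question in turn, then the original question with that context."""
    context: List[str] = []
    for sub_question in decompose(question):
        sub_answer = answer(sub_question, "\n".join(context))
        context.append(f"Q: {sub_question} A: {sub_answer}")
    return answer(question, "\n".join(context))


# Hypothetical facts a vision-language model could read off one image.
IMAGE_FACTS = {
    "What animal is in the image?": "cat",
    "Where is the cat?": "on the sofa",
}


def toy_vqa(question: str, context: str) -> str:
    """Hypothetical VQA model: answers simple questions directly and
    composite questions only from the accumulated context."""
    if question in IMAGE_FACTS:
        return IMAGE_FACTS[question]
    return "yes" if "A: cat" in context and "A: on the sofa" in context else "no"


def toy_decompose(question: str) -> List[str]:
    """Hypothetical fixed decomposition; an LLM would generate these."""
    return ["What animal is in the image?", "Where is the cat?"]


if __name__ == "__main__":
    q = "Is there a cat on the sofa?"
    print(answer_with_decomposition(q, toy_decompose, toy_vqa))  # -> yes
    print(answer_with_decomposition(q, lambda _: [], toy_vqa))  # single step -> no
```

The second call skips decomposition, so the toy model must answer the composite question in a single step and fails, which illustrates the single-step limitation the decomposition strategy is meant to address.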

Conclusion

The development of Agentic LLMs for AI Orchestration represents a significant advancement in artificial intelligence. By integrating computer vision, logic, and compute modules, our LLM is poised to transform how complex workflows are managed and executed. Grounded in the research above and trained with reinforced self-training under weak supervision, our agentic LLM sets a new standard in AI orchestration for both performance and adaptability.