Today, I want to delve into something that has been on my mind lately: the development of disciplines. By “disciplines,” I mean areas of exploration like chemistry, physics, engineering, materials science, biology, sociology, and economics—essentially any field of study.

The Experimental Beginnings

Most disciplines begin their journey in an experimental phase, long before they are formally recognized as distinct fields. Early practitioners are driven by curiosity, engaging in hands-on experimentation that gradually leads to replicable outcomes. Consider the early chemists who mixed chemicals to observe reactions, often without understanding the underlying principles.

As these fields attract more attention and funding, the complexity and cost of experiments increase. Success breeds complexity, leading to an explosion of experimental possibilities. This rapid expansion is exciting but eventually reaches a point where the sheer scale of experiments becomes a bottleneck.

The Shift to Theoretical Frameworks

When the cost and effort required to advance knowledge become prohibitive, disciplines must evolve. They develop theoretical frameworks that provide a structured way to understand and predict outcomes without constant experimentation. This shift is crucial—it allows for better resource allocation and helps target key experiments that can significantly advance the field.

For instance, in chemistry, the move from simple mixtures to analytical chemistry marked a significant evolution. The field transformed further with the advancement of theoretical chemistry, which incorporated mathematical frameworks like statistical mechanics and quantum mechanics. These frameworks allowed for more strategic, less resource-intensive experiments that expanded our understanding of chemical properties and reactions.

Contemporary Challenges in AI and Machine Learning

Contrast this with the current state of machine learning and AI, which we often consider highly theoretical. In reality, much of AI’s development has been experimental, not driven by theoretical understanding but by trial and error. Techniques like convolutional neural networks and transformers were developed through extensive experimentation, guided more by intuition and analogy to human brain functions than by solid theoretical foundations.

The Role of Big Science and Big Data

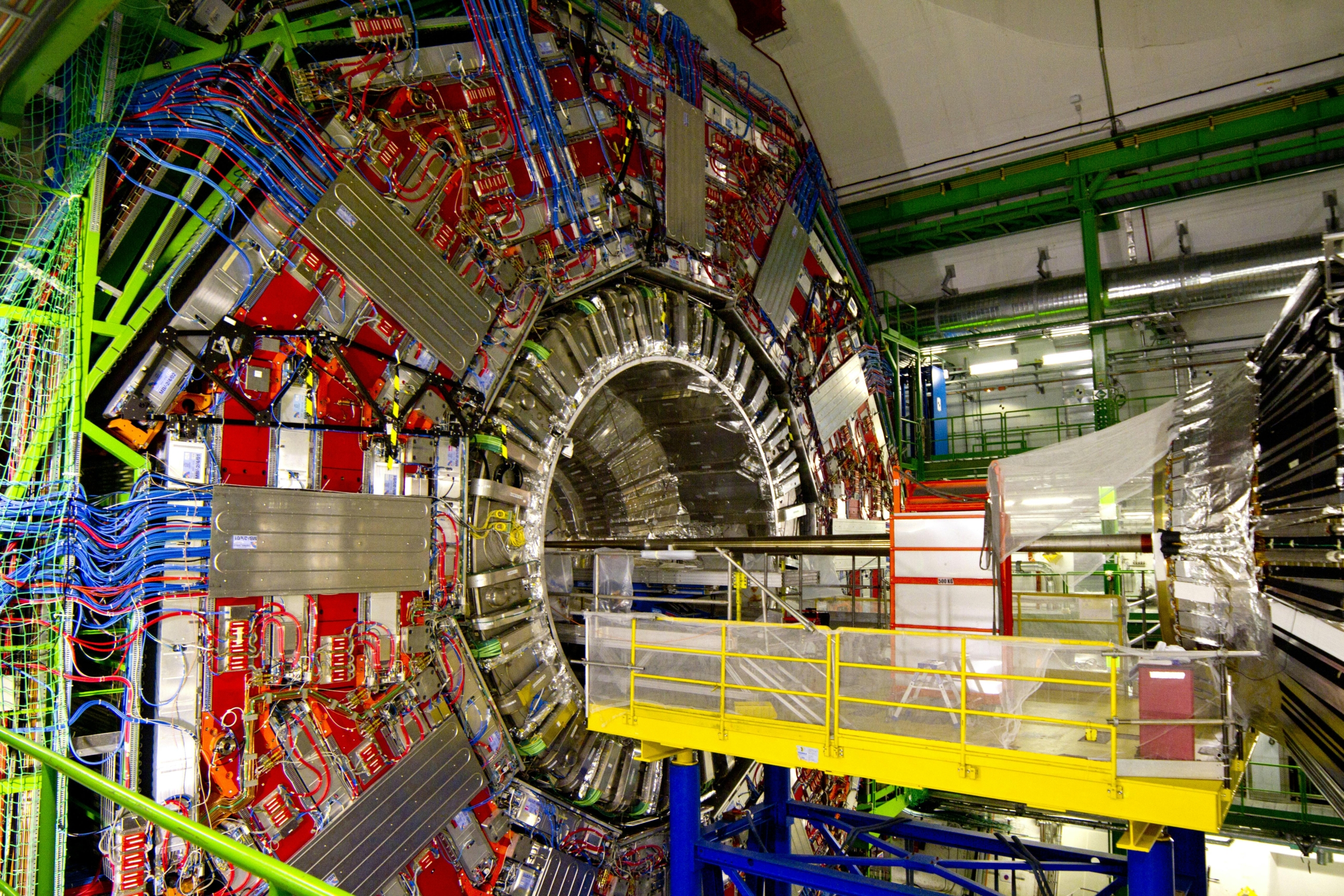

This brings us to a critical point in contemporary science: the role of massive, resource-intensive projects. Consider CERN’s Large Hadron Collider, a pinnacle of experimental physics that required about $5 billion and hundreds of years of cumulative theoretical and experimental physics to justify such societal investment. This project was predicated on strong theoretical predictions that required confirmation through cutting-edge experiments.

In contrast, the burgeoning field of AI, particularly generative AI, is witnessing calls for investments in data centers that could cost between $2 trillion and $5 trillion. These calls rest on just a few years of experimental and theoretical work in machine learning. The comparison is stark. AI and machine learning are still in their experimental phase, akin to the early days of chemistry or physics, and their theoretical underpinnings are not yet robust enough to warrant such massive investment.

A Call for Theoretical Maturity in AI

We are at a juncture where the experimental costs and the demands for data and computation in AI are approaching those of large-scale physics experiments. Yet the theoretical maturity of AI does not match its experimental ambitions. This mismatch risks wasteful investment and missed opportunities for more focused, theory-driven advances.

Conclusion

As we navigate the exciting but tumultuous waters of AI development, we must heed the lessons from other disciplines. Balancing experimentation with theoretical development, understanding the right timing for investment, and ensuring that our ambitions are matched by our understanding are crucial steps toward sustainable and impactful scientific progress.

Thank you for joining me in this exploration today, and I look forward to our continued journey through the fascinating world of scientific and technological development.

Christopher White is the President at NEC Laboratories America, Inc., leading a team of world-class researchers focusing on diverse topics from sensing to networking to machine learning-based understanding. Chris has extensive expertise in scientific computing, hierarchical simulation techniques, quantum chemistry, optical networks, optical devices, and acoustic scattering.