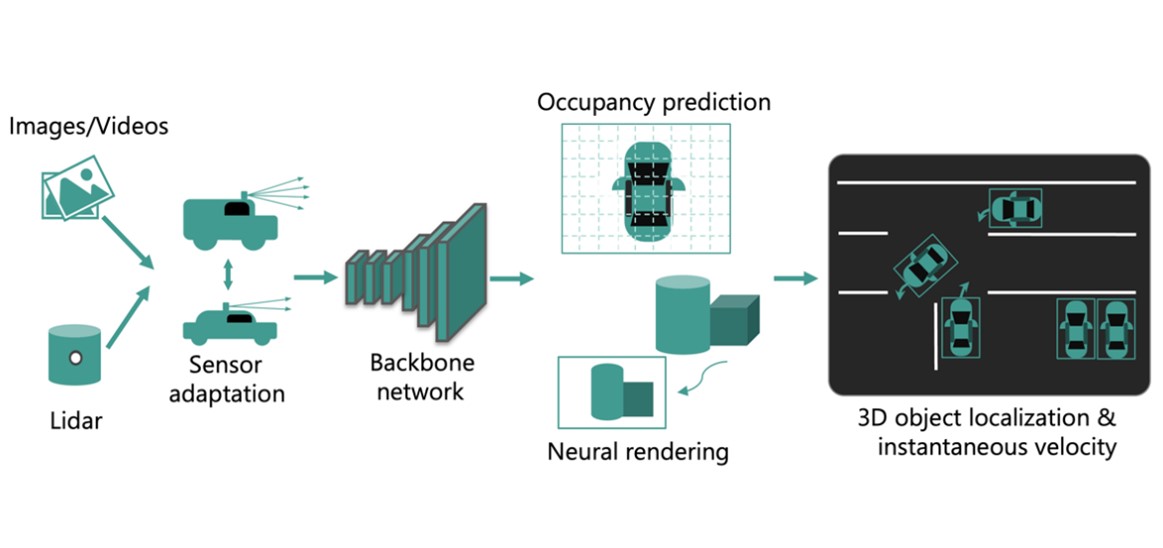

We have pioneered the development of learned bird's-eye view (BEV) representations of road scenes, which form a basis for image-based 3D perception in applications such as autonomous driving. Using novel geometric and learned priors, our techniques for 3D object localization achieve high accuracy for object position, orientation, and part locations with just a monocular camera. We have led the development of the first monocular SLAM systems for large-scale outdoor driving scenes, as well as structure-from-motion methods that overcome the challenges posed by rolling-shutter cameras in high-speed applications. Our Lidar-based instantaneous motion estimation can detect subtle motions, such as a car about to begin merging into a driving lane, enabling early prediction of intent and thereby improving safety.

Publication Tags (project tag): 3dots