MEDIA ANALYTICS

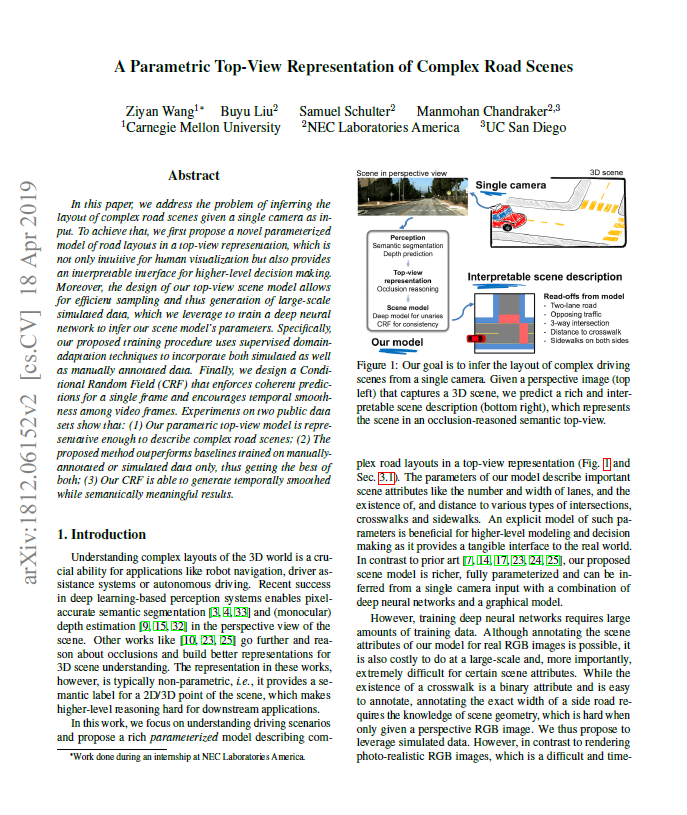

A Parametric Top-View Representation of Complex Road Scenes

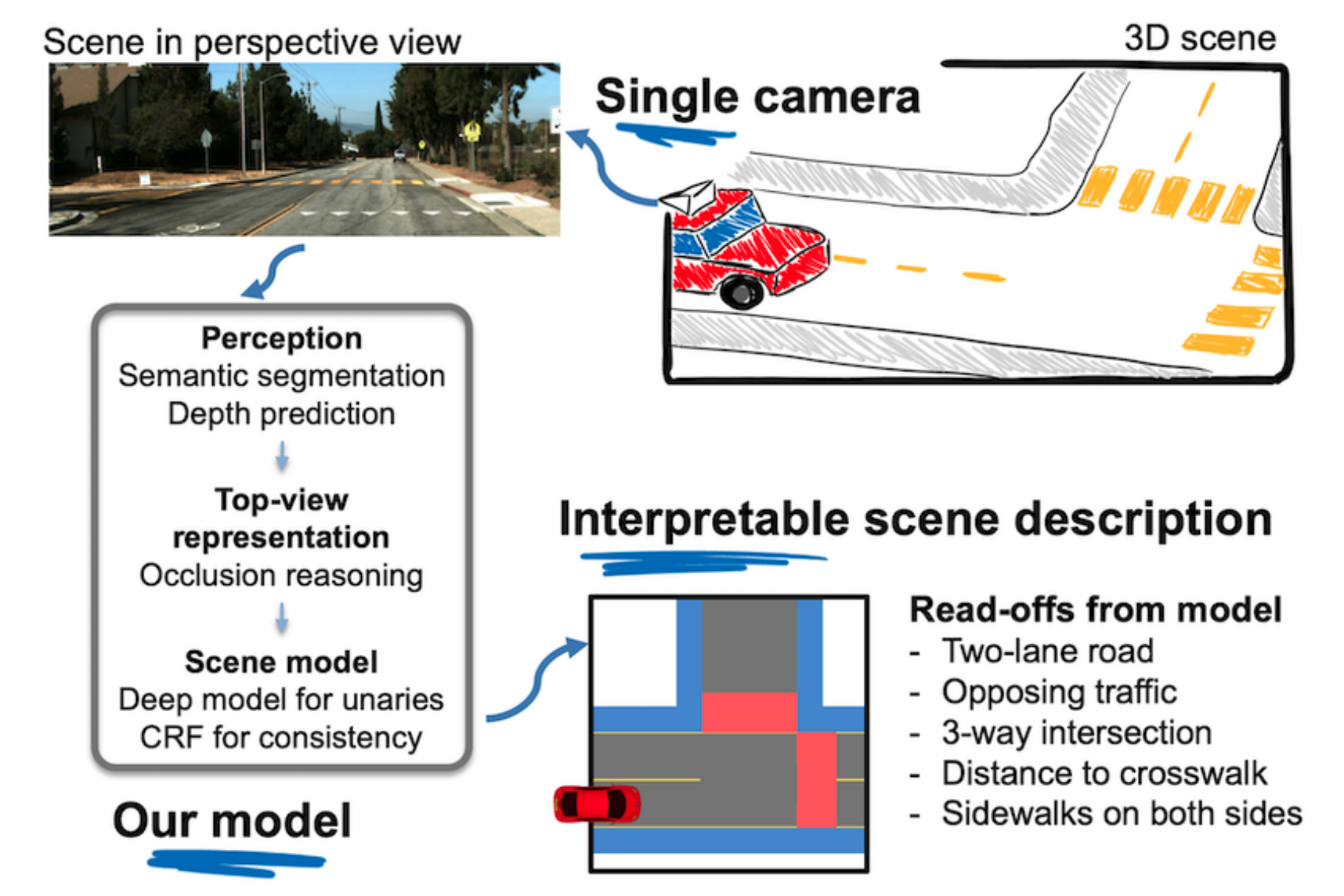

We address the problem of inferring the layout of complex road scenes from a single camera. We first propose a novel parameterized model of road layouts in a top-view representation. The model is intuitive for human visualization and also provides an interpretable interface for higher-level decision making. Moreover, the design of our top-view scene model allows for efficient sampling, and thus generation, of large-scale simulated data, which we leverage to train a deep neural network to infer our scene model’s parameters. Finally, we design a conditional random field (CRF) that enforces coherent predictions within a single frame and encourages temporal smoothness across video frames.
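To illustrate why a parametric top-view model makes simulated data cheap to generate, here is a minimal sketch. It is not the authors' scene model. The parameter set (`num_lanes`, `lane_width_m`, `has_crosswalk`, `crosswalk_dist_m`), the sampling ranges, and the helpers `sample_params` and `render_top_view` are all hypothetical. The sketch draws a few parameters at random and rasterizes them into a coarse top-view grid, where rows are distance ahead of the camera and columns are lateral offset.

```python
import random
from dataclasses import dataclass


@dataclass
class SceneParams:
    # Hypothetical parameters, chosen for illustration only.
    num_lanes: int           # lanes on the main road
    lane_width_m: float      # width of one lane, in metres
    has_crosswalk: bool      # whether a crosswalk lies ahead
    crosswalk_dist_m: float  # distance from the camera to the crosswalk centre


def sample_params(rng: random.Random) -> SceneParams:
    """Draw one random scene; each sample costs only a few random numbers."""
    return SceneParams(
        num_lanes=rng.randint(1, 4),
        lane_width_m=rng.uniform(2.75, 3.75),
        has_crosswalk=rng.random() < 0.3,
        crosswalk_dist_m=rng.uniform(5.0, 40.0),
    )


def render_top_view(p: SceneParams, width_m: float = 20.0,
                    depth_m: float = 40.0, cell_m: float = 0.5) -> list[list[int]]:
    """Rasterize parameters into a grid: 0 = background, 1 = road, 2 = crosswalk."""
    cols = int(width_m / cell_m)
    rows = int(depth_m / cell_m)
    road_half = p.num_lanes * p.lane_width_m / 2  # road centred on the camera
    grid = []
    for r in range(rows):
        z = (r + 0.5) * cell_m  # distance ahead of the cell centre
        row = []
        for c in range(cols):
            x = (c + 0.5) * cell_m - width_m / 2  # lateral offset of the cell centre
            if abs(x) <= road_half:
                on_crosswalk = p.has_crosswalk and abs(z - p.crosswalk_dist_m) <= 1.5
                row.append(2 if on_crosswalk else 1)
            else:
                row.append(0)
        grid.append(row)
    return grid


if __name__ == "__main__":
    rng = random.Random(0)
    # Each (rendered grid, parameters) pair is one simulated training example.
    dataset = [(render_top_view(p), p) for p in (sample_params(rng) for _ in range(5))]
    print(len(dataset), "simulated examples")
```

A network could then be trained to regress the parameters from such top-view inputs. The real model covers far more layout attributes than these four, but the pattern is the same: sampling a scene reduces to sampling a short parameter vector.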

Collaborators: Ziyan Wang, Manmohan Chandraker

Project Site

A Parametric Top-View Representation of Complex Road Scenes Paper

In IEEE Conference on Computer Vision and Pattern Recognition (CVPR) 2019

Download Paper [CVPR PDF] [arXiv PDF] [NEC Labs America PDF] [Bibtex]

Abstract

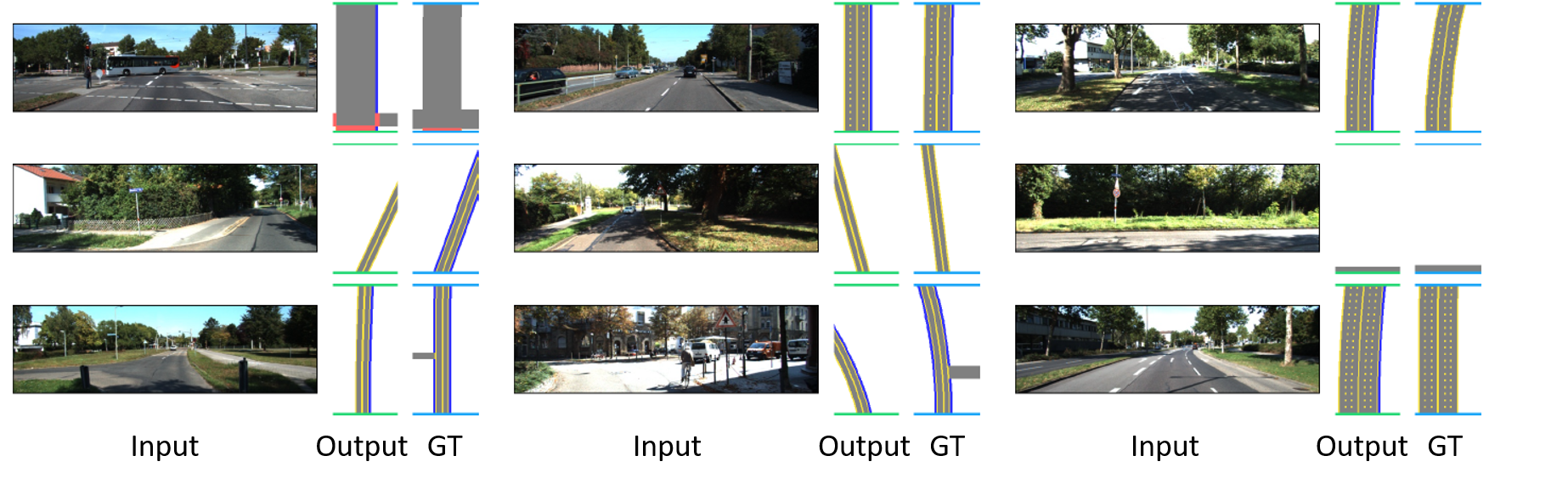

In this paper, we address the problem of inferring the layout of complex road scenes from a single camera. To this end, we first propose a novel parameterized model of road layouts in a top-view representation, which is not only intuitive for human visualization but also provides an interpretable interface for higher-level decision-making. Moreover, the design of our top-view scene model allows for efficient sampling, and thus generation, of large-scale simulated data, which we leverage to train a deep neural network to infer our scene model’s parameters. Specifically, our proposed training procedure uses supervised domain adaptation techniques to incorporate both simulated and manually annotated data. Finally, we design a conditional random field (CRF) that enforces coherent predictions within a single frame and encourages temporal smoothness across video frames. Experiments on two public datasets show that: (1) our parametric top-view model is representative enough to describe complex road scenes; (2) the proposed method outperforms baselines trained on manually annotated or simulated data only, thus getting the best of both; and (3) our CRF generates temporally smooth yet semantically meaningful results.
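The paper's CRF is not reproduced here. As a much simpler stand-in that shows only the temporal-smoothness idea, consider a chain over frames for one continuous scene parameter. The energy is $E(x) = \sum_t (x_t - y_t)^2 + \lambda \sum_t (x_{t+1} - x_t)^2$, where $y_t$ is the network's per-frame prediction and $\lambda \ge 0$ weights the pairwise term. Because this energy is quadratic, setting its gradient to zero gives a tridiagonal linear system with an exact solution. The function name `smooth_sequence` and the single-parameter setup are assumptions for illustration.

```python
def smooth_sequence(y: list[float], lam: float) -> list[float]:
    """Exactly minimise sum_t (x_t - y_t)^2 + lam * sum_t (x_{t+1} - x_t)^2.

    y:   per-frame predictions of one continuous scene parameter
    lam: non-negative weight of the temporal term (lam = 0 returns y unchanged)
    The zero-gradient condition is a tridiagonal system, solved here with the
    Thomas algorithm in O(len(y)) time.
    """
    n = len(y)
    if n == 0:
        return []
    # Diagonal entries: 1 from the unary term, plus lam per temporal neighbour.
    diag = [1.0 + lam * ((t > 0) + (t < n - 1)) for t in range(n)]
    off = -lam  # every off-diagonal entry of the (symmetric) system matrix
    # Forward sweep: eliminate the sub-diagonal.
    cp = [0.0] * n
    dp = [0.0] * n
    cp[0] = off / diag[0]
    dp[0] = y[0] / diag[0]
    for i in range(1, n):
        m = diag[i] - off * cp[i - 1]
        cp[i] = off / m
        dp[i] = (y[i] - off * dp[i - 1]) / m
    # Back substitution.
    x = [0.0] * n
    x[-1] = dp[-1]
    for i in range(n - 2, -1, -1):
        x[i] = dp[i] - cp[i] * x[i + 1]
    return x


if __name__ == "__main__":
    # A one-frame spike in, for example, a predicted distance to an intersection.
    print(smooth_sequence([0.0, 10.0, 0.0], lam=1.0))
```

For the input `[0, 10, 0]` with `lam=1`, the exact minimizer is `[2.5, 5, 2.5]`. The spike is damped, and the sequence mean is preserved. A larger `lam` trades fidelity to each frame for smoothness over time. The paper's CRF additionally couples different parameters within a frame so that, for example, discrete and continuous attributes stay consistent. This sketch does not attempt that coupling.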

Dataset

Results