MEDIA ANALYTICS

Controllable Dynamic Multi-Task Architectures

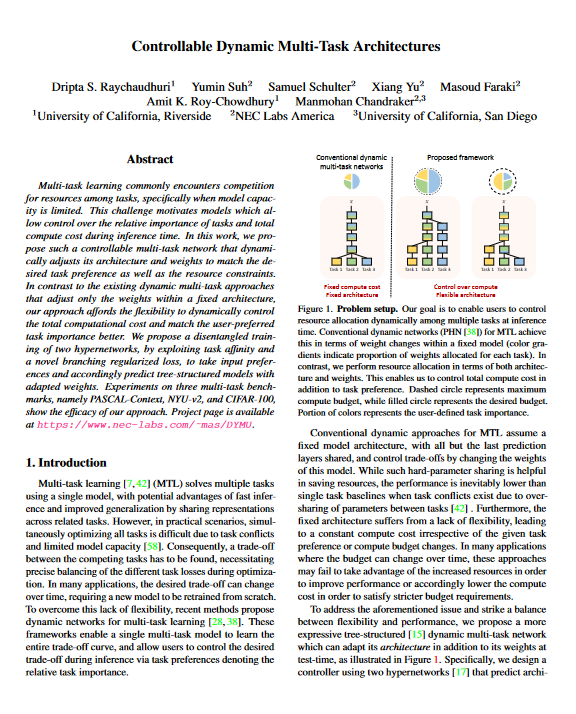

Our goal is to let users dynamically control how resources are allocated among multiple tasks at inference time. Conventional dynamic networks for multi-task learning (MTL), such as PHN, achieve this only through weight changes within a fixed model (color gradients indicate the proportion of weights allocated to each task). In contrast, we allocate resources through both architecture and weights, which lets us control total compute cost in addition to task preference. The dashed circle represents the maximum compute budget, while the filled circle represents the desired budget. The proportion of each color represents the user-defined task importance.
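To make the link between architecture and compute cost concrete, here is a minimal sketch. It is not taken from the paper: the task names, the per-layer costs, and the helpers `is_tree` and `total_cost` are all hypothetical. It assumes a model is described, layer by layer, by which tasks share a block, and that each extra block at a layer adds that layer's cost once more.

```python
from typing import FrozenSet, List, Sequence

# A partition lists the groups of tasks that share one block at a given layer.
Partition = List[FrozenSet[str]]


def is_tree(partitions: Sequence[Partition]) -> bool:
    """Return True if task groups only ever split (never merge) with depth."""
    for prev, curr in zip(partitions, partitions[1:]):
        for group in curr:
            # Every group must sit inside exactly one parent group's subtree.
            if not any(group <= parent for parent in prev):
                return False
    return True


def total_cost(partitions: Sequence[Partition], layer_costs: Sequence[float]) -> float:
    """Sum layer costs, counting one block per task group at each layer."""
    if len(partitions) != len(layer_costs):
        raise ValueError("need one partition per layer")
    return sum(cost * len(groups) for groups, cost in zip(partitions, layer_costs))


# Hypothetical tasks and per-layer costs (arbitrary units), for illustration only.
TASKS = frozenset({"seg", "depth", "normals"})
LAYER_COSTS = [4.0, 3.0, 2.0, 1.0]

ALL = [TASKS]                                   # one block shared by all tasks
SOLO = [frozenset({t}) for t in sorted(TASKS)]  # one block per task

fully_shared = [ALL, ALL, ALL, ALL]
late_branch = [ALL, ALL, [frozenset({"seg"}), frozenset({"depth", "normals"})], SOLO]
fully_separate = [SOLO, SOLO, SOLO, SOLO]

# Fully separate networks play the role of the maximum compute budget.
max_budget = total_cost(fully_separate, LAYER_COSTS)

for name, arch in [("fully_shared", fully_shared),
                   ("late_branch", late_branch),
                   ("fully_separate", fully_separate)]:
    cost = total_cost(arch, LAYER_COSTS)
    print(f"{name}: tree={is_tree(arch)}, cost={cost}, fraction of max={cost / max_budget:.2f}")
```

Under these assumptions, moving the branching points earlier or later trades compute for task-specific capacity, which a fixed architecture with adjustable weights cannot do.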

Collaborators: Dripta S. Raychaudhuri, Amit K. Roy-Chowdhury, Manmohan Chandraker

Controllable Dynamic Multi-Task Architectures Paper

Xiang Yu² Masoud Faraki² Amit K. Roy-Chowdhury¹ Manmohan Chandraker²,³

[Download Paper] [Paper at arXiv] [Code (coming soon)]

Abstract

Multi-task learning commonly encounters competition for resources among tasks, especially when model capacity is limited. This challenge motivates models that allow control over the relative importance of tasks and the total compute cost at inference time. In this work, we propose such a controllable multi-task network, which dynamically adjusts its architecture and weights to match the desired task preference as well as the resource constraints. In contrast to existing dynamic multi-task approaches that adjust only the weights within a fixed architecture, our approach affords the flexibility to dynamically control the total computational cost and to better match the user-preferred task importance. We propose disentangled training of two hypernetworks, exploiting task affinity and a novel branching regularized loss, which take input preferences and accordingly predict tree-structured models with adapted weights. Experiments on three multi-task benchmarks, namely PASCAL-Context, NYU-v2, and CIFAR-100, show the efficacy of our approach.
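The data flow described in the abstract can be illustrated with a toy, untrained sketch. It is not the authors' implementation, and everything in it is an assumption made for illustration: the `LinearHypernet` class, the split into one hypernetwork for branching decisions and one for weights, the two-task setting, the budget expressed as a fraction in [0, 1], and the thresholding rule. It shows only how a task preference and a budget could be mapped to a tree-shaped sharing pattern and a set of weights.

```python
import random
from typing import List, Sequence, Tuple


def normalize_preference(raw: Sequence[float]) -> List[float]:
    """Scale non-negative task importances so they sum to 1."""
    total = sum(raw)
    if total <= 0 or any(x < 0 for x in raw):
        raise ValueError("preferences must be non-negative and not all zero")
    return [x / total for x in raw]


class LinearHypernet:
    """Toy hypernetwork: a fixed random linear map from inputs to outputs."""

    def __init__(self, in_dim: int, out_dim: int, seed: int = 0) -> None:
        rng = random.Random(seed)
        self.weight = [[rng.gauss(0.0, 0.1) for _ in range(in_dim)]
                       for _ in range(out_dim)]
        self.bias = [0.0] * out_dim

    def __call__(self, x: Sequence[float]) -> List[float]:
        return [sum(w * xi for w, xi in zip(row, x)) + b
                for row, b in zip(self.weight, self.bias)]


def predict_model(pref: Sequence[float], budget: float,
                  arch_net: LinearHypernet,
                  weight_net: LinearHypernet) -> Tuple[List[bool], List[float]]:
    """Map (task preference, budget) to a sharing pattern and weights."""
    x = normalize_preference(pref) + [budget]
    # Hypothetical rule: a positive logit means the two tasks branch at that layer.
    branch_logits = arch_net(x)
    shared, split = [], False
    for logit in branch_logits:
        split = split or logit > 0   # tree constraint: once split, stay split
        shared.append(not split)
    weights = weight_net(x)          # stand-in for the adapted model weights
    return shared, weights


# Two tasks, four layers, six target weights; all sizes are arbitrary.
arch_net = LinearHypernet(in_dim=3, out_dim=4, seed=0)
weight_net = LinearHypernet(in_dim=3, out_dim=6, seed=1)

shared, weights = predict_model([3, 1], budget=0.5,
                                arch_net=arch_net, weight_net=weight_net)
print("layers shared by both tasks:", shared)
print("predicted weights:", [round(w, 3) for w in weights])
```

In a trained system the two maps would be learned rather than random; the sketch only fixes the interface, with a preference vector and budget as input and an architecture plus weights as output.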