
MEDIA ANALYTICS


Learning to Look Around Objects for Top-View Representations of Outdoor Scenes

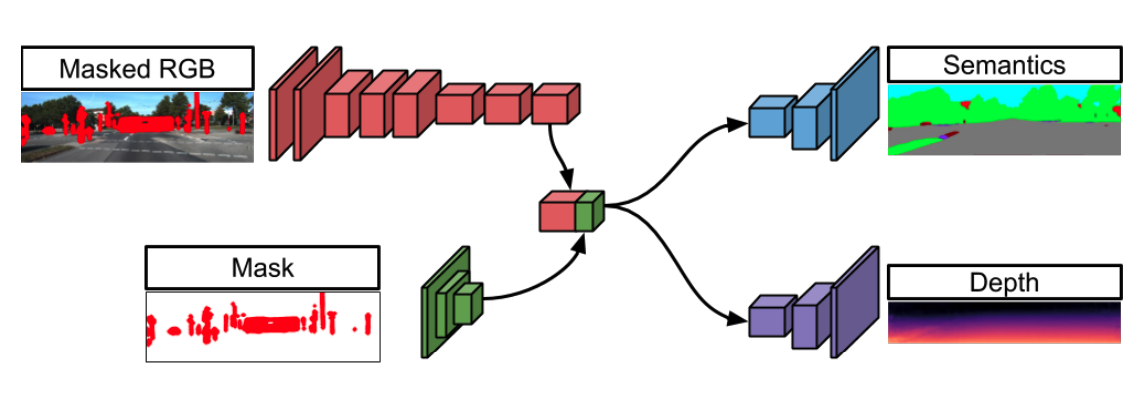

ECCV 2018 | We propose a convolutional neural network that learns to predict occluded portions of a scene layout by looking around foreground objects such as cars or pedestrians. Rather than hallucinating RGB values, we show that directly predicting semantics and depth in the occluded areas enables a better transformation into the top view. We further show that this initial top-view representation can be significantly enhanced by learning priors and rules about typical road layouts from simulated data or, if available, map data. Crucially, training our model does not require costly or subjective human annotations for occluded areas or the top view; it relies instead on readily available annotations for standard semantic segmentation.
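To illustrate why per-pixel semantics plus depth are enough to build a top view, here is a minimal sketch of the generic geometric step: back-project each pixel with a pinhole camera model, drop the height coordinate, and let pixels vote for a semantic label in a bird's-eye grid. This is not the authors' implementation. The pinhole intrinsics (`fx`, `cx`), the grid extents, the cell size, and the majority-vote rule are all illustrative assumptions, and the function name `semantic_depth_to_bev` is hypothetical.

```python
import math
from collections import Counter


def semantic_depth_to_bev(labels, depth, fx, cx, x_range, z_range, cell_size):
    """Hypothetical sketch: project per-pixel semantics + depth into a top-view grid.

    labels, depth: H x W nested lists (class id, metric depth along the optical axis).
    fx, cx: horizontal focal length and principal point in pixels (assumed pinhole model).
    x_range, z_range: (min, max) lateral / forward grid extent in metres (assumed).
    Returns rows (forward bins, near to far) x cols (lateral bins, left to right)
    holding the majority label per cell, or None where no pixel landed.
    """
    x_min, x_max = x_range
    z_min, z_max = z_range
    n_cols = int(math.ceil((x_max - x_min) / cell_size))
    n_rows = int(math.ceil((z_max - z_min) / cell_size))
    votes = [[Counter() for _ in range(n_cols)] for _ in range(n_rows)]

    for label_row, depth_row in zip(labels, depth):
        for u, (label, d) in enumerate(zip(label_row, depth_row)):
            if d <= 0:  # skip pixels without a valid depth estimate
                continue
            # Back-project with the pinhole model; height (y) is dropped for the top view,
            # so fy and cy are not needed here.
            x = (u - cx) * d / fx
            z = d
            if not (x_min <= x < x_max and z_min <= z < z_max):
                continue  # outside the top-view grid
            # Clamp guards against floating-point rounding at the upper edge.
            col = min(int((x - x_min) // cell_size), n_cols - 1)
            row = min(int((z - z_min) // cell_size), n_rows - 1)
            votes[row][col][label] += 1

    # Majority vote per cell; empty cells stay None (unobserved in the top view).
    return [[c.most_common(1)[0][0] if c else None for c in row] for row in votes]
```

For example, a 2 x 4 image whose top row (depth 4 m) is labelled `[1, 1, 2, 2]` and whose bottom row (depth 1 m) is labelled `0`, projected with `fx=2.0`, `cx=1.5` into an 8 m x 6 m grid of 2 m cells, yields `[[None, 0, 0, None], [None, None, None, None], [1, 1, 2, 2]]`. The empty middle row shows the kind of gaps a naive projection leaves, which is what learned layout priors would then need to fill in.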

Collaborators: Menghua Zhai, Nathan Jacobs, Manmohan Chandraker