Object Detection With a Unified Label Space From Multiple Datasets

Collaborators: Xiangyun Zhao, Gaurav Sharma, Yi-Hsuan Tsai, Manmohan Chandraker, Ying Wu

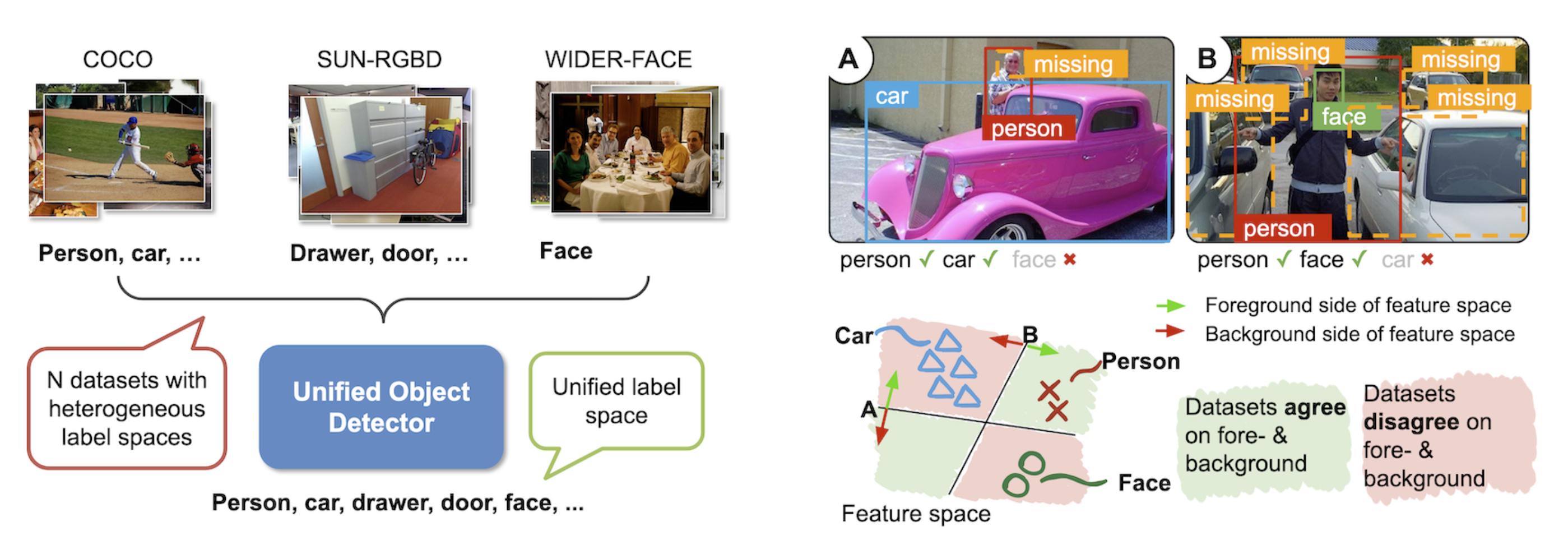

(a) We train a single object detector from multiple datasets with heterogeneous label spaces. In contrast to prior work, our model unifies the label spaces of all datasets. (b) Illustration of the ambiguity of background in object detection when training from multiple datasets with different label spaces. Here, only “person” is annotated in both datasets, whereas “car” and “face” are each annotated in only one of them. Naïvely combining the datasets leads to wrong training signals, because an unannotated “car” or “face” would be treated as background.

Project Site

As described in the main paper, evaluation over the unified label space requires new bounding box annotations. Specifically, after unifying the label space, certain datasets contain object categories that were not annotated originally. While the task we propose involves handling such missing annotations during training, we still need complete annotations to evaluate the model. Thus, we collect annotations for the missing categories in the validation/test sets of all respective datasets. To reproduce the results reported in the paper, we release the new annotations for the VOC, COCO, SUN-RGBD, and LISA-Signs datasets. In addition, we recently annotated the Widerface and KITTI datasets, and we release those annotations as well for future research.
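To make the notion of "missing categories" concrete, the following minimal sketch builds a unified label space from per-dataset label spaces and lists, for each dataset, the categories that were not annotated originally. The function name `unify_label_spaces` and the toy datasets `dataset_a` and `dataset_b` are hypothetical and chosen only for illustration; they do not reflect the released annotation files or the actual category lists of the datasets above.

```python
def unify_label_spaces(label_spaces):
    """Return the unified label space and the categories each dataset lacks.

    label_spaces maps a dataset name to the list of categories it annotates.
    """
    # The unified label space is the union of all per-dataset label spaces.
    unified = sorted(set().union(*label_spaces.values()))
    # A category is "missing" in a dataset if it is part of the unified
    # space but was never annotated in that dataset.
    missing = {
        name: sorted(set(unified) - set(labels))
        for name, labels in label_spaces.items()
    }
    return unified, missing


# Hypothetical toy label spaces mirroring the figure: only "person" is shared.
label_spaces = {
    "dataset_a": ["person", "car"],
    "dataset_b": ["person", "face"],
}
unified, missing = unify_label_spaces(label_spaces)
print(unified)
print(missing)
```

In this toy setup, the categories listed under `missing` are the ones that would need new annotations in the validation/test split of each dataset before evaluating over the unified label space.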

An example from the LISA-Signs dataset. In the original annotations, only traffic signs are annotated. All other categories are newly annotated.

Object Detection With a Unified Label Space From Multiple Datasets Paper

Xiangyun Zhao¹, Samuel Schulter², Gaurav Sharma², Yi-Hsuan Tsai², Manmohan Chandraker²,³, Ying Wu¹

¹Northwestern University ²NEC Labs America ³UC San Diego

In European Conference on Computer Vision (ECCV) 2020

Abstract

Given multiple datasets with different label spaces, the goal of this work is to train a single object detector predicting over the union of all the label spaces. The practical benefits of such an object detector are obvious and significant: application-relevant categories can be picked and merged from arbitrary existing datasets. However, naïve merging of datasets is not possible in this case, due to inconsistent object annotations. Consider an object category like faces that is annotated in one dataset, but is not annotated in another dataset, although the object itself appears in the latter’s images. Some categories, like face here, would thus be considered foreground in one dataset, but background in another. To address this challenge, we design a framework which works with such partial annotations, and we exploit a pseudo-labeling approach that we adapt for our specific case. We propose loss functions that carefully integrate partial but correct annotations with complementary but noisy pseudo labels. Evaluation in the proposed novel setting requires full annotation on the test set. We collect the required annotations and define a new challenging experimental setup for this task based on existing public datasets. We show improved performance compared to competitive baselines and appropriate adaptations of existing work.
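The foreground/background ambiguity described above can be illustrated with a small classification-loss sketch. It shows one common way of handling partial annotations, under stated assumptions: a predicted box that matches a ground-truth box receives the usual cross-entropy loss, while an unmatched box from a given dataset is only required to be either background or one of the categories that dataset does not annotate. This is not claimed to be the exact loss from the paper, and it leaves out the pseudo-labeling component entirely. The names `partial_annotation_loss`, `classes`, and `dataset_labels` are hypothetical.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def partial_annotation_loss(logits, classes, target, dataset_labels):
    """Classification loss for one predicted box (illustrative assumption).

    classes:        category names of the unified space, incl. "background"
    target:         matched ground-truth category, or None if unmatched
    dataset_labels: categories annotated in the box's source dataset
    """
    probs = softmax(logits)
    if target is not None:
        # Matched box: the annotation is correct, use plain cross-entropy.
        return -math.log(probs[classes.index(target)])
    # Unmatched box: it may be true background OR an object of a category
    # this dataset never annotated, so sum the probability of all of them.
    allowed = sum(
        p for c, p in zip(classes, probs)
        if c == "background" or c not in dataset_labels
    )
    return -math.log(allowed)


classes = ["background", "person", "car", "face"]
annotated_in_a = {"person", "car"}   # hypothetical dataset without "face"
face_like = [0.0, 0.0, 0.0, 5.0]     # detector is confident the box is a face

ambiguous = partial_annotation_loss(face_like, classes, None, annotated_in_a)
naive = partial_annotation_loss(face_like, classes, "background", annotated_in_a)
print(ambiguous, naive)
```

In this example the naïve loss, which forces the unannotated face to be background, is much larger than the ambiguity-aware loss, which matches the wrong training signal described in the abstract.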