MEDIA ANALYTICS

UniSeg: Learning Semantic Segmentation from Multiple Datasets with Label Shifts

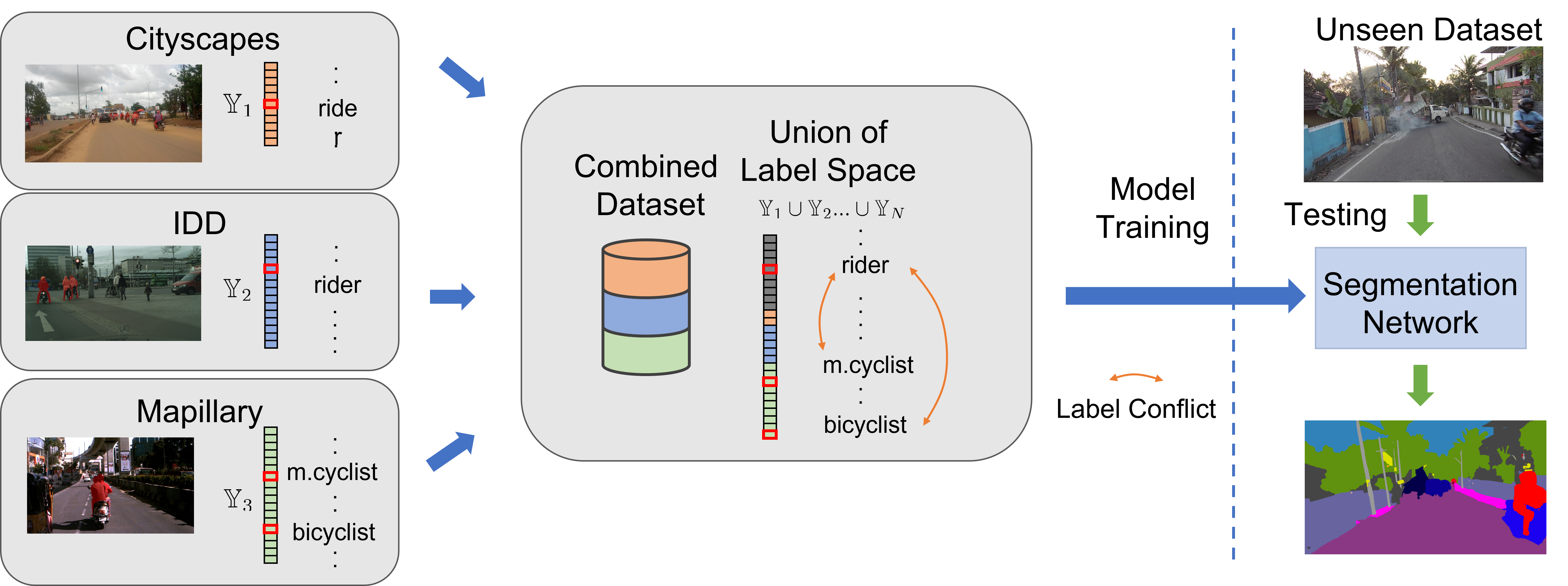

We work on the problem of multi-dataset semantic segmentation, where each dataset has a different label space. We show that directly combining all the datasets and training a single model leads to a gradient conflict whenever labels conflict in the unified label space. This conflict can degrade predictions at test time, when the model is given an image from an unseen dataset. For example, the rider in the right image could be assigned to either the “rider” or the “motorcyclist” category in the unified space. It is therefore important to develop a method that accounts for such label conflicts during training.
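To see why a single softmax over the unified label space produces conflicting gradients, recall that the gradient of softmax cross-entropy with respect to the logits is p − y. The sketch below is an illustration under stated assumptions, not the UniSeg implementation: the three-class label space, the logit values, and the helper names `softmax` and `softmax_ce_grad` are hypothetical. It assumes two visually identical pixels (hence identical logits), one labeled “rider” by dataset A and one labeled “motorcyclist” by dataset B.

```python
import math

def softmax(logits):
    """Numerically stable softmax over a list of logits."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def softmax_ce_grad(logits, target):
    """Gradient of softmax cross-entropy w.r.t. the logits: p - onehot(target)."""
    p = softmax(logits)
    return [p_k - (1.0 if k == target else 0.0) for k, p_k in enumerate(p)]

# Hypothetical unified label space (illustration only).
CLASSES = ["road", "rider", "motorcyclist"]
RIDER, MOTORCYCLIST = 1, 2

# Same pixel appearance, so same logits; only the source dataset's label differs.
logits = [0.2, 1.0, 1.0]
g_a = softmax_ce_grad(logits, RIDER)         # labeled "rider" in dataset A
g_b = softmax_ce_grad(logits, MOTORCYCLIST)  # labeled "motorcyclist" in dataset B

for k, name in enumerate(CLASSES):
    print(f"{name:>12}: dataset A {g_a[k]:+.3f}   dataset B {g_b[k]:+.3f}")
```

The gradients on the “rider” and “motorcyclist” logits have opposite signs for the two samples, so updates from the two datasets pull the shared classifier in opposite directions.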

Collaborators: Dongwan Kim, Yi-Hsuan Tsai, Sparsh Garg, Manmohan Chandraker & Bohyung Han

Paper: Learning Semantic Segmentation from Multiple Datasets with Label Shifts

Abstract

With increasing applications of semantic segmentation, numerous datasets have been proposed in the past few years. Yet labeling remains expensive; thus, it is desirable to jointly train models across aggregations of datasets to increase data volume and diversity. However, label spaces differ across datasets and may even conflict with one another. This paper proposes UniSeg, an effective approach to automatically train models across multiple datasets with differing label spaces, without any manual relabeling effort. Specifically, we propose two losses that account for conflicting and co-occurring labels to achieve better generalization performance in unseen domains. First, we identify a gradient conflict in training caused by mismatched label spaces and propose a class-independent binary cross-entropy loss to alleviate such label conflicts. Second, we propose a loss function that considers class relationships across datasets for a better multi-dataset training scheme. Extensive quantitative and qualitative analyses on road-scene datasets show that UniSeg improves over multi-dataset baselines, especially on unseen datasets, e.g., achieving a gain of more than 8% IoU on KITTI. Lastly, UniSeg achieves 39.4% IoU on the WildDash2 public benchmark, making it one of the strongest submissions in the zero-shot setting.
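The class-independent binary cross-entropy idea can be sketched for a single pixel as follows. This is a minimal illustration, not the authors' code: it assumes a per-class sigmoid over the unified label space and further assumes that each pixel is supervised only on the classes in its source dataset's label space. The names `masked_bce` and `valid_classes` and the example label spaces are hypothetical, and the paper's exact formulation may differ. Because each class's gradient σ(z_k) − y_k depends only on its own logit, and a class outside a dataset's label space receives no gradient from that dataset, the “rider” and “motorcyclist” samples no longer push against each other.

```python
import math

def sigmoid(z: float) -> float:
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def masked_bce(logits, target, valid_classes):
    """Class-independent BCE for one pixel over a unified label space.

    `valid_classes` (hypothetical) lists the unified-class indices that belong
    to the pixel's source dataset; other classes get no loss and no gradient.
    Returns (loss, grads) with grads[k] = dL/dz_k, averaged over valid classes.
    """
    n = len(valid_classes)
    loss = 0.0
    grads = [0.0] * len(logits)
    for k in valid_classes:
        z = logits[k]
        y = 1.0 if k == target else 0.0
        # Stable BCE with logits: softplus(z) - y * z.
        loss += (max(z, 0.0) - y * z + math.log1p(math.exp(-abs(z)))) / n
        # Each class's gradient depends only on its own logit and target.
        grads[k] = (sigmoid(z) - y) / n
    return loss, grads

# Hypothetical unified label space and per-dataset label spaces.
ROAD, RIDER, MOTORCYCLIST = 0, 1, 2
space_a = [ROAD, RIDER]          # dataset A annotates "rider"
space_b = [ROAD, MOTORCYCLIST]   # dataset B annotates "motorcyclist"

logits = [0.2, 1.0, 1.0]  # same pixel appearance in both datasets
_, g_a = masked_bce(logits, RIDER, space_a)
_, g_b = masked_bce(logits, MOTORCYCLIST, space_b)
print("dataset A grads:", [round(g, 3) for g in g_a])
print("dataset B grads:", [round(g, 3) for g in g_b])
```

The loss uses the stable form max(z, 0) − y·z + log(1 + e^(−|z|)), which equals the usual −[y log σ(z) + (1 − y) log(1 − σ(z))] without taking the log of a saturated sigmoid.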