Degeneracy in Self-Calibration Revisited & a Deep Learning Solution for Uncalibrated SLAM

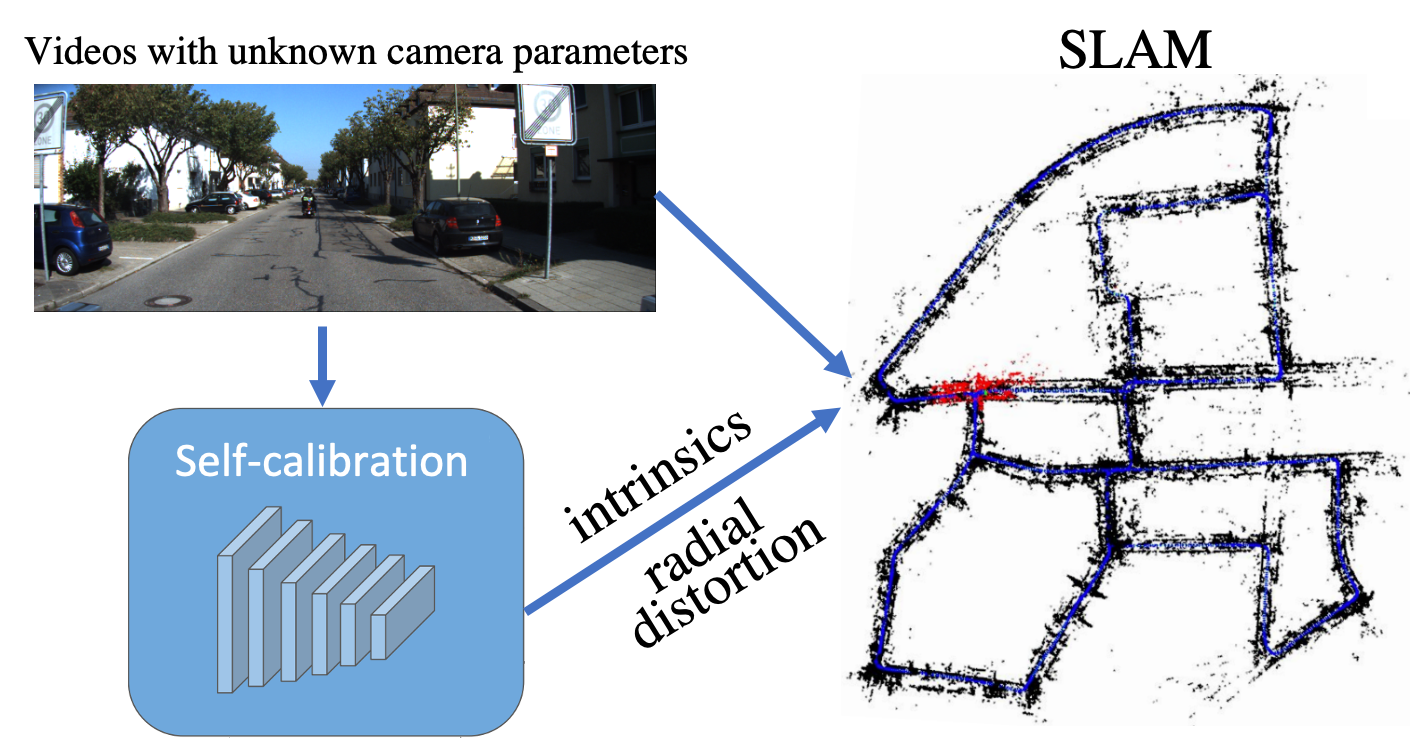

We first revisit the geometric approach to radial distortion self-calibration and provide a proof that explicitly shows the ambiguity between radial distortion and scene depth under forward camera motion. In view of this geometric degeneracy and the prevalence of forward motion in practice, we further propose a learning approach that trains a convolutional neural network on a large amount of synthetic data to estimate the camera parameters, and we show its application to SLAM without prior knowledge of the camera parameters.

Collaborators: Manmohan Chandraker

Paper: Degeneracy in Self-Calibration Revisited and a Deep Learning Solution for Uncalibrated SLAM

¹National University of Singapore, ²NEC Labs America, ³University of California, San Diego

IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), 2019 [Oral]

From a set of calibrated images with known camera intrinsics and no radial distortion, we generate synthetic uncalibrated images for training our network by randomly adjusting the camera intrinsics and randomly adding radial distortion. At test time, given a single real uncalibrated image, our network predicts accurate camera parameters, which are then used to produce the calibrated image.
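
The data-generation step described above can be sketched as follows. This is a minimal NumPy illustration under stated assumptions, not the authors' pipeline: the function name `synthesize_uncalibrated`, the sampling ranges, the single-coefficient polynomial distortion model (mapping distorted to undistorted normalized coordinates), and the nearest-neighbour resampling are all hypothetical choices made for the example.

```python
import numpy as np

def synthesize_uncalibrated(image, K, rng, focal_scale=(0.8, 1.2),
                            center_shift=0.05, k1_range=(-0.3, 0.0)):
    """Warp a calibrated image into a synthetic uncalibrated one.

    Returns the warped image together with the sampled intrinsics and
    distortion coefficient, which would serve as training labels.
    All ranges are illustrative assumptions, not values from the paper.
    """
    h, w = image.shape[:2]

    # Randomly adjust the intrinsics: scale the focal length, shift the center.
    s = rng.uniform(*focal_scale)
    K_new = np.array(K, dtype=float)
    K_new[0, 0] *= s
    K_new[1, 1] *= s
    K_new[0, 2] += rng.uniform(-center_shift, center_shift) * w
    K_new[1, 2] += rng.uniform(-center_shift, center_shift) * h

    # Randomly pick a radial distortion coefficient (assumed one-parameter model).
    k1 = rng.uniform(*k1_range)

    # Normalized coordinates of every pixel in the synthetic (distorted) image.
    u, v = np.meshgrid(np.arange(w), np.arange(h))
    x_d = (u - K_new[0, 2]) / K_new[0, 0]
    y_d = (v - K_new[1, 2]) / K_new[1, 1]

    # Assumed model: undistorted = distorted * (1 + k1 * r_d^2).
    factor = 1.0 + k1 * (x_d**2 + y_d**2)
    x_u, y_u = x_d * factor, y_d * factor

    # Look up the matching pixel in the calibrated source image.
    src_u = np.rint(K[0, 0] * x_u + K[0, 2]).astype(int)
    src_v = np.rint(K[1, 1] * y_u + K[1, 2]).astype(int)
    valid = (src_u >= 0) & (src_u < w) & (src_v >= 0) & (src_v < h)

    out = np.zeros_like(image)  # pixels with no source stay black
    out[valid] = image[src_v[valid], src_u[valid]]
    return out, K_new, k1
```

Calling `synthesize_uncalibrated(img, K, np.random.default_rng(0))` repeatedly on the same calibrated image yields differently distorted copies, each paired with the parameters a network would be trained to regress.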

Abstract

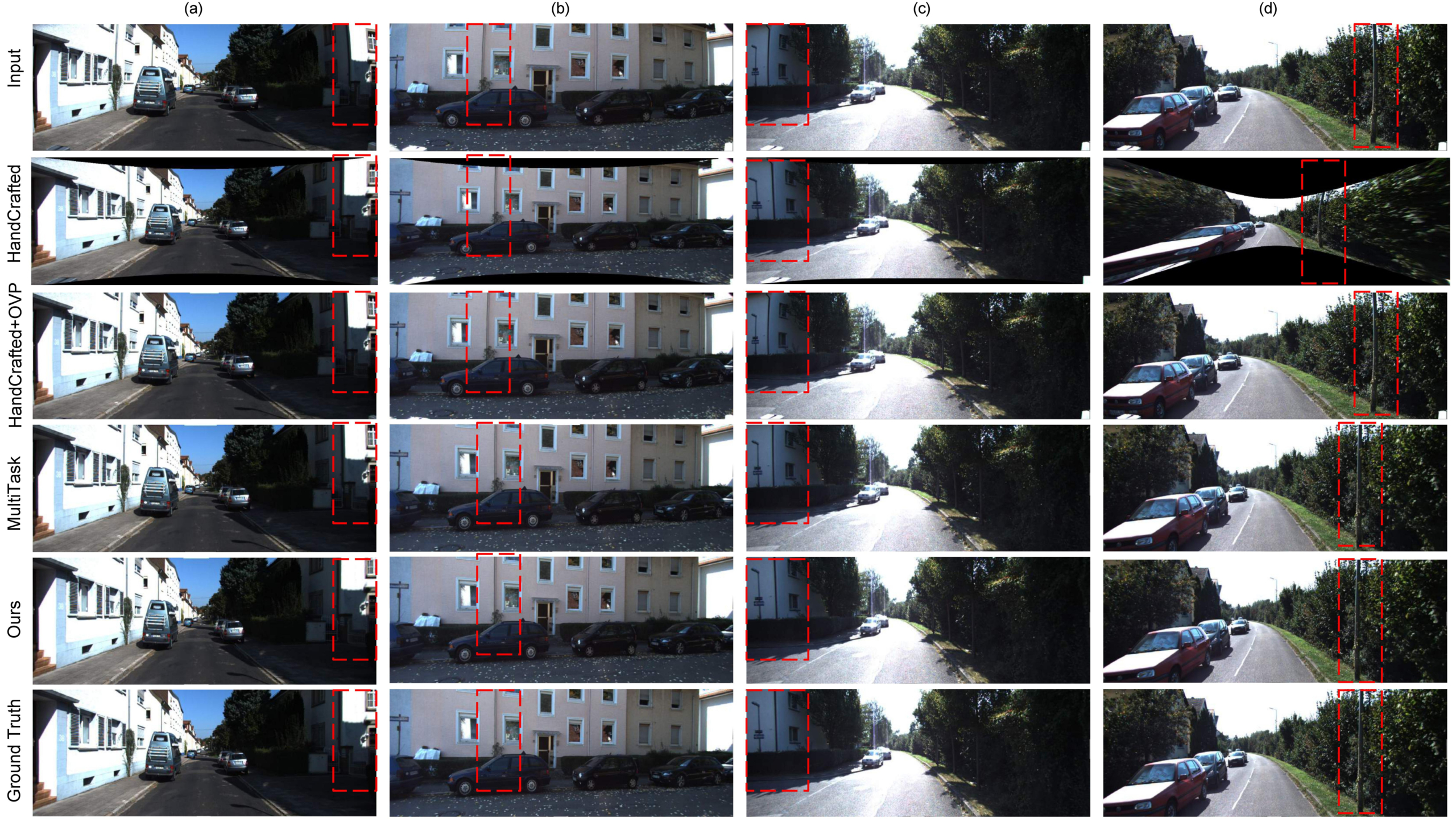

Self-calibration of camera intrinsics and radial distortion has a long history of research in the computer vision community. However, it remains rare to see real applications of such techniques to modern Simultaneous Localization And Mapping (SLAM) systems, especially in driving scenarios. In this paper, we revisit the geometric approach to this problem, and provide a theoretical proof that explicitly shows the ambiguity between radial distortion and scene depth when two-view geometry is used to self-calibrate the radial distortion. In view of such geometric degeneracy, we propose a learning approach that trains a convolutional neural network (CNN) on a large amount of synthetic data. We demonstrate the utility of our proposed method by applying it as a checkerboard-free calibration tool for SLAM, achieving comparable or superior performance to previous learning and hand-crafted methods.

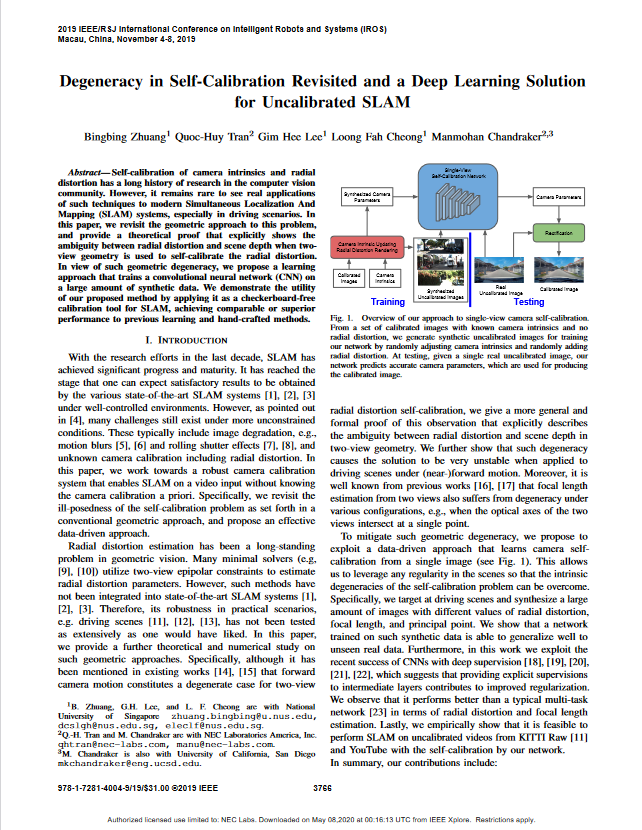

Geometric Approach vs. Learning Approach in Near-Degenerate Setting

Radial distortion estimation by geometric and learning approaches. (a) Radial distortion estimates. (b) PDF of radial distortion estimates.

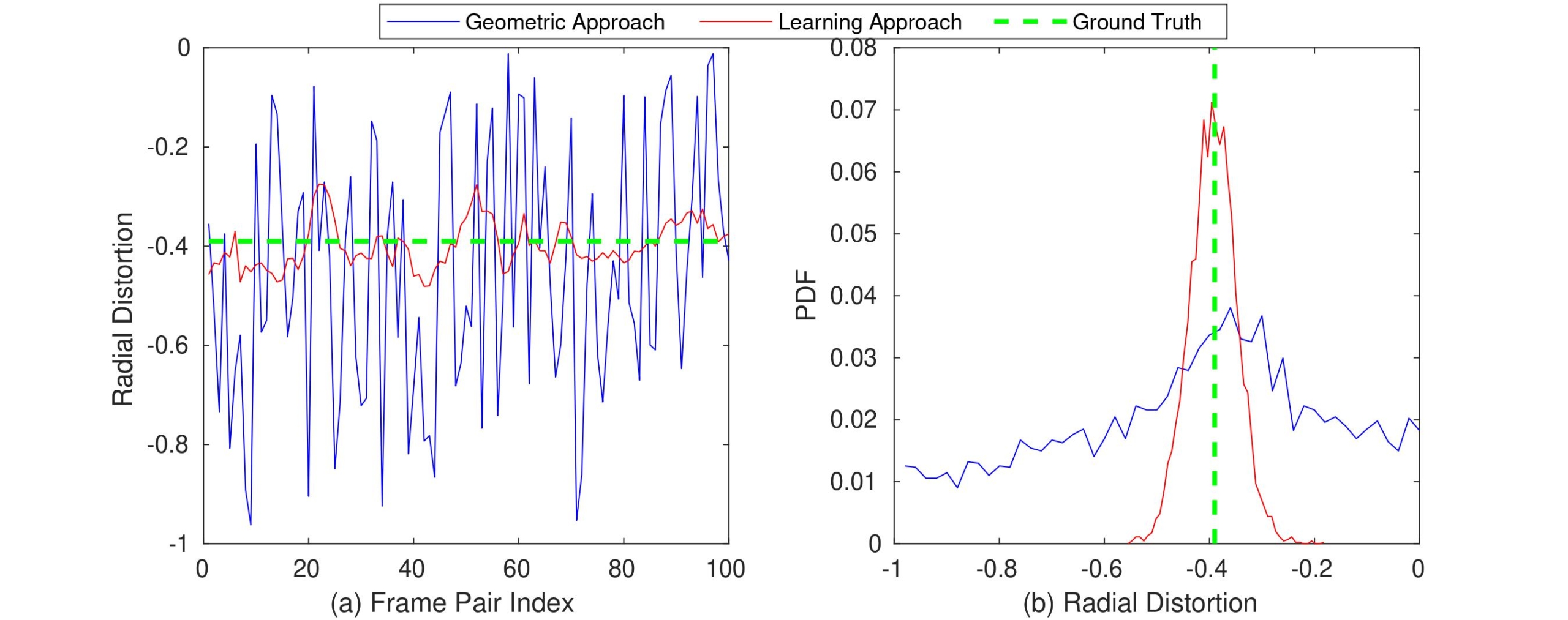

Single-View Self-Calibration Results

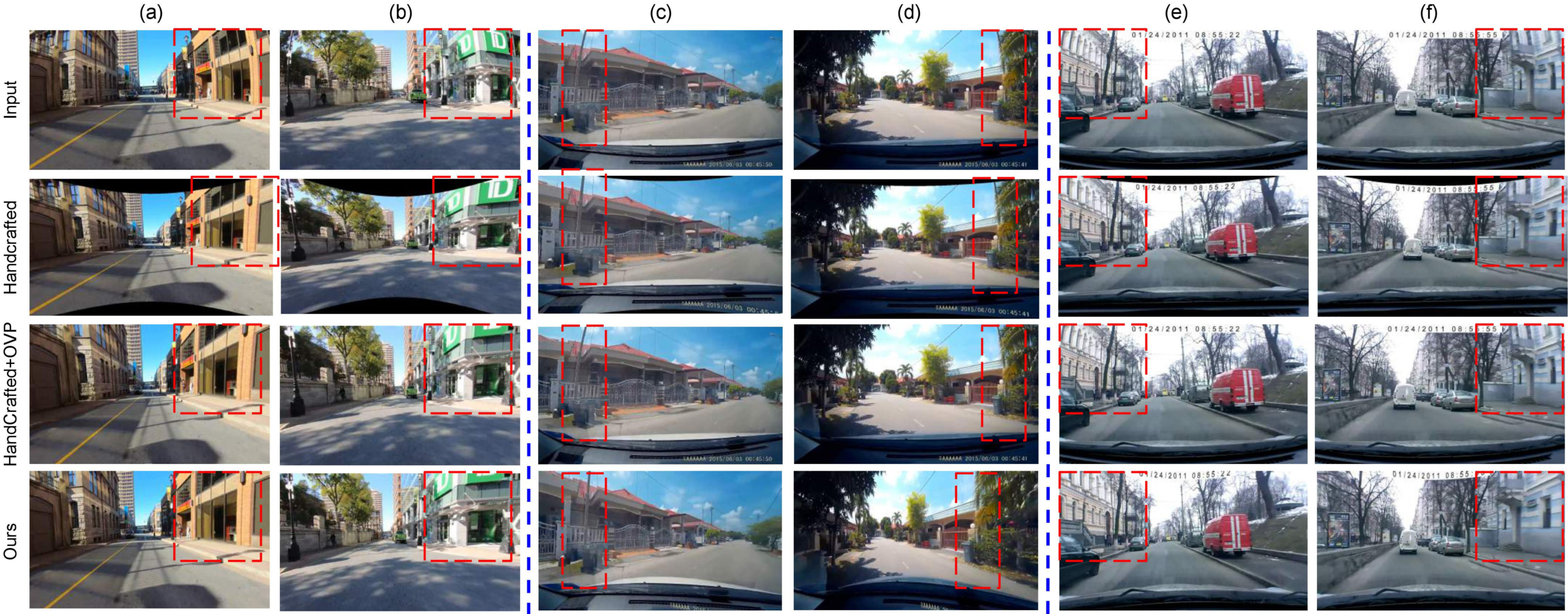

Qualitative results of single-view camera self-calibration on the KITTI Raw test sequence.

Qualitative results of single-view camera self-calibration on YouTube test sequences. (a)-(b) Sequence 1. (c)-(d) Sequence 2. (e)-(f) Sequence 3.

Uncalibrated SLAM Results

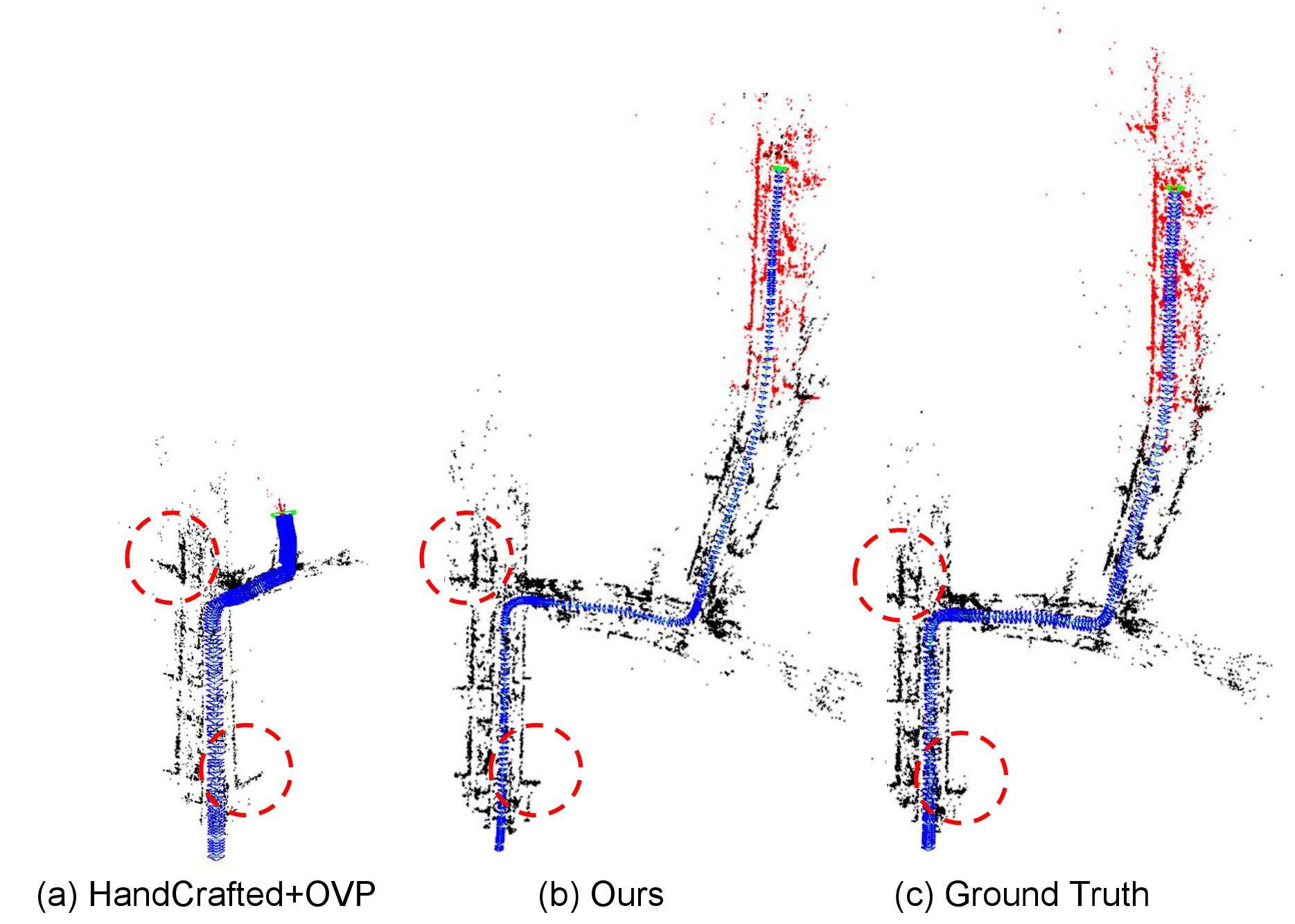

Qualitative results of uncalibrated SLAM on the first 400 frames of the KITTI Raw test sequence. Red points denote the latest local map.

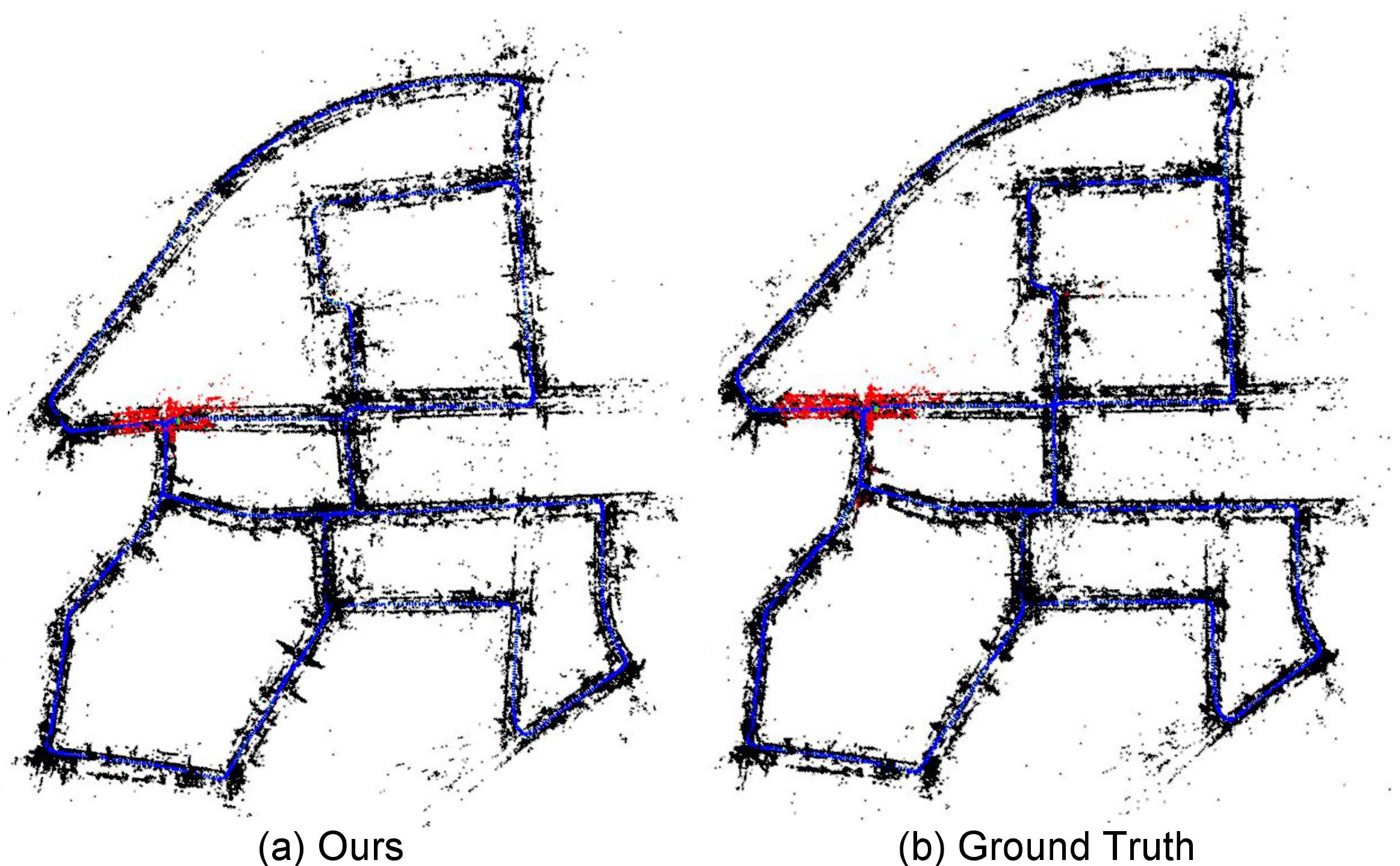

Qualitative results of uncalibrated SLAM on the entire KITTI Raw test sequence. Red points denote the latest local map.

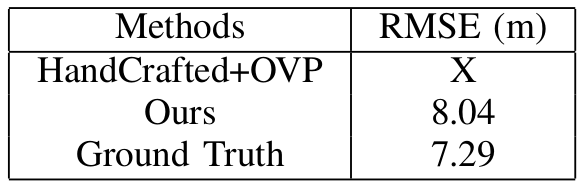

Quantitative comparisons in terms of median RMSE of keyframe trajectory over 5 runs on the entire KITTI Raw test sequence. X denotes that 'HandCrafted+OVP' breaks ORB-SLAM in all 5 runs.
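
For readers unfamiliar with the metric, the following sketch shows one way a median RMSE over repeated runs could be computed. It assumes each estimated keyframe trajectory has already been aligned to the ground truth and associated frame by frame; the function names and the convention of marking a failed run as `None` are hypothetical and not taken from the paper.

```python
import numpy as np

def trajectory_rmse(estimated, ground_truth):
    """RMSE of per-keyframe position error between two aligned N x 3 trajectories."""
    est = np.asarray(estimated, dtype=float)
    gt = np.asarray(ground_truth, dtype=float)
    errors = np.linalg.norm(est - gt, axis=1)  # Euclidean error per keyframe
    return float(np.sqrt(np.mean(errors**2)))

def median_rmse(runs, ground_truth):
    """Median RMSE over the runs that completed; None if every run failed.

    `runs` is a list of trajectories, with None marking a run in which
    tracking broke (an assumed convention for this example).
    """
    scores = [trajectory_rmse(r, ground_truth) for r in runs if r is not None]
    return float(np.median(scores)) if scores else None
```

Taking the median rather than the mean keeps a single unusually good or bad run from dominating the reported number.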