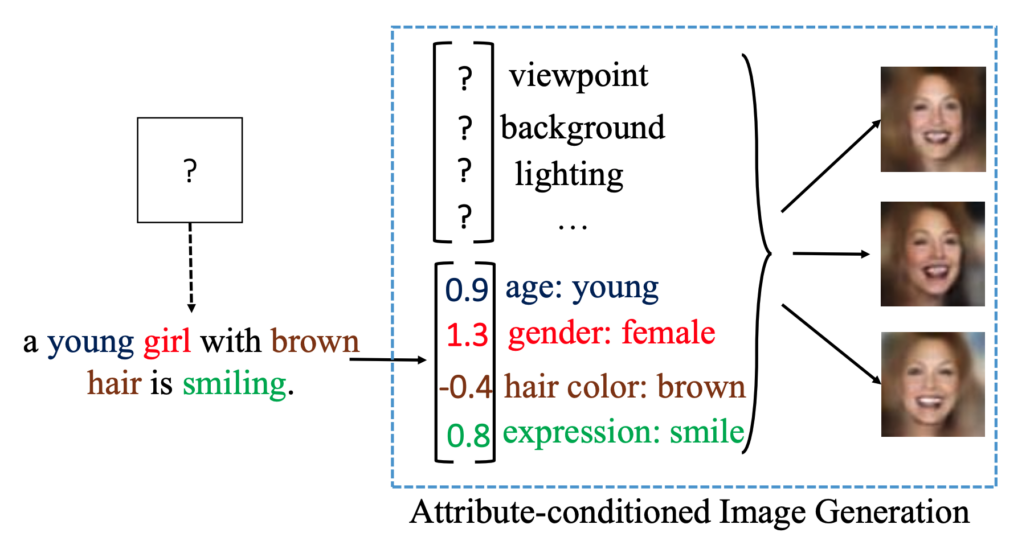

Attribute2Image: Conditional Image Generation From Visual Attributes

Publication Date: December 2, 2015

Event: ECCV 2016, The 14th European Conference on Computer Vision (2016)

Reference: 1512.00570v2

Authors: Xinchen Yan, Jimei Yang, Kihyuk Sohn, Honglak Lee

Abstract: This paper investigates a novel problem of generating images from visual attributes. We model the image as a composite of foreground and background and develop a layered generative model with disentangled latent variables that can be learned end-to-end using a variational auto-encoder. We experiment with natural images of faces and birds and demonstrate that the proposed models are capable of generating realistic and diverse samples with disentangled latent representations. For posterior inference of latent variables given novel images, we use a general energy minimization algorithm. With this inference procedure, the learned generative models show excellent quantitative and visual results in the tasks of attribute-conditioned image reconstruction and completion.
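
As a rough illustration of what "a composite of foreground and background" can mean, the sketch below blends two layers with a per-pixel soft mask. The abstract does not state the compositing formula, so the blending rule, the function name `composite`, and the toy 3x3 layers are all assumptions made for illustration, not the authors' implementation.

```python
def composite(foreground, background, gate):
    """Blend two equally sized grayscale layers pixel by pixel.

    gate[i][j] in [0, 1] is the weight given to the foreground at that
    pixel; the background receives the remaining weight (1 - gate).
    This blending rule is an assumed form, not taken from the paper.
    """
    return [
        [g * f + (1.0 - g) * b for f, b, g in zip(f_row, b_row, g_row)]
        for f_row, b_row, g_row in zip(foreground, background, gate)
    ]


# Hypothetical 3x3 layers: a bright foreground over a dark background.
foreground = [[0.9] * 3 for _ in range(3)]
background = [[0.1] * 3 for _ in range(3)]

# The mask selects the centre pixel fully and its right neighbour halfway.
gate = [
    [0.0, 0.0, 0.0],
    [0.0, 1.0, 0.5],
    [0.0, 0.0, 0.0],
]

image = composite(foreground, background, gate)
```

In a layered generative model of this kind, the two layers and the mask would each be produced by a decoder from latent variables and attributes; here they are fixed by hand so that only the compositing step is shown.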

Publication Link: https://arxiv.org/abs/1512.00570