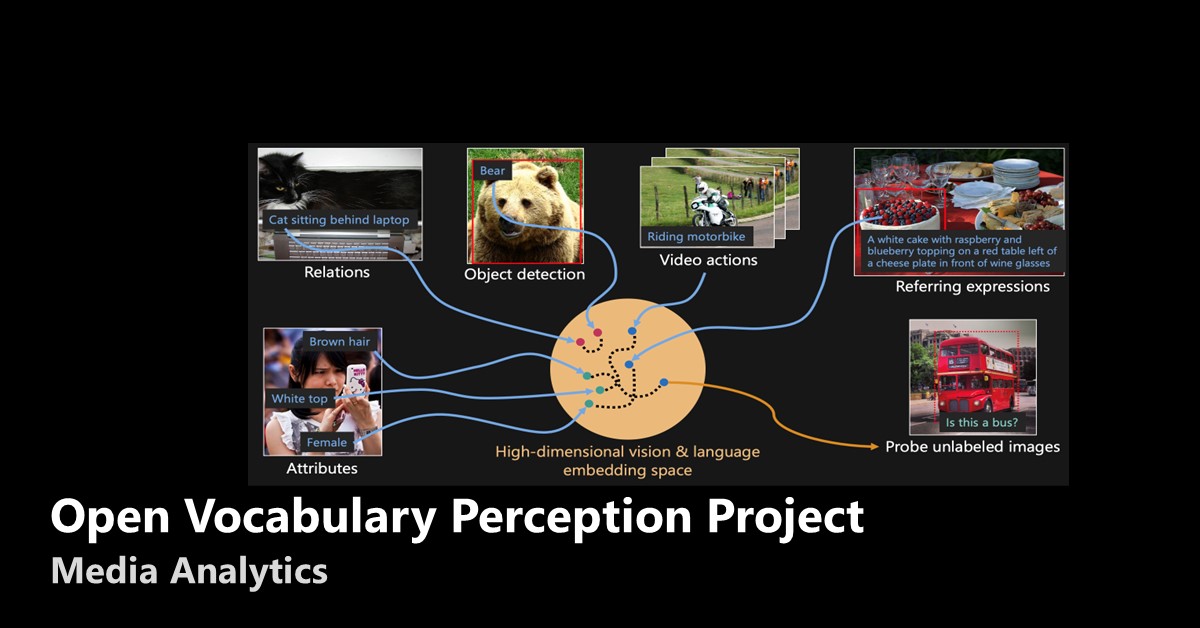

Perception methods such as object detection and image segmentation are a basic building block of most computer vision applications. We develop open vocabulary perception methods that combine vision and language to provide rich descriptions of objects in scenes, including their attributes, behaviors, relations, and interactions. Our open vocabulary detectors map visual and language concepts into a shared embedding space, which lets them detect even object categories that never appeared in the training data. Beyond making visual perception more general, our industry-leading ability to produce rich descriptions supports better decision-making in downstream applications. Our perception methods build on advances in vision transformers to achieve high accuracy. They achieve high speed through efficient design choices that allow multi-scale computation while limiting the cost of self-attention.
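The shared-embedding idea can be illustrated with a minimal sketch of generic open vocabulary classification. It is not the team's detector. It assumes a vision encoder has already produced an embedding for a detected region, and a text encoder has produced embeddings for candidate class names in the same space (as in CLIP-style models). The function names, the temperature value, and the toy 3-dimensional vectors are hypothetical. Because the vocabulary is simply a set of text embeddings, a category never seen during detector training can still be scored by embedding its name.

```python
import math
from typing import Dict, List, Sequence


def l2_normalize(v: Sequence[float]) -> List[float]:
    """Scale a vector to unit length so dot products become cosine similarities."""
    norm = math.sqrt(sum(x * x for x in v))
    if norm == 0.0:
        raise ValueError("cannot normalize a zero vector")
    return [x / norm for x in v]


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity between two embeddings in the shared space."""
    a_n, b_n = l2_normalize(a), l2_normalize(b)
    return sum(x * y for x, y in zip(a_n, b_n))


def classify_region(
    region_embedding: Sequence[float],
    text_embeddings: Dict[str, Sequence[float]],
    temperature: float = 0.01,  # hypothetical value; sharpens the softmax
) -> Dict[str, float]:
    """Return a probability for each class name by comparing the region
    embedding with every text embedding, then applying a softmax."""
    names = list(text_embeddings)
    logits = [cosine(region_embedding, text_embeddings[n]) / temperature for n in names]
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(l - m) for l in logits]
    total = sum(exps)
    return {n: e / total for n, e in zip(names, exps)}


# Toy, made-up embeddings: in practice these would come from trained encoders.
vocabulary = {
    "cat": [1.0, 0.0, 0.0],
    "dog": [0.0, 1.0, 0.0],
    "zebra": [0.0, 0.0, 1.0],  # adding a new name needs no detector retraining
}
region = [0.1, 0.2, 0.9]

probs = classify_region(region, vocabulary)
best = max(probs, key=probs.get)  # → 'zebra'
```

Extending the vocabulary only means adding another entry to the dictionary of text embeddings; the region side is untouched. Real detectors typically also learn how regions are proposed and embedded, which this sketch leaves out.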

Team Members: Abhishek Aich, Vijay Kumar BG

Publication Tags: machine learning, computer vision, object detection, language based object detection, open vocabulary