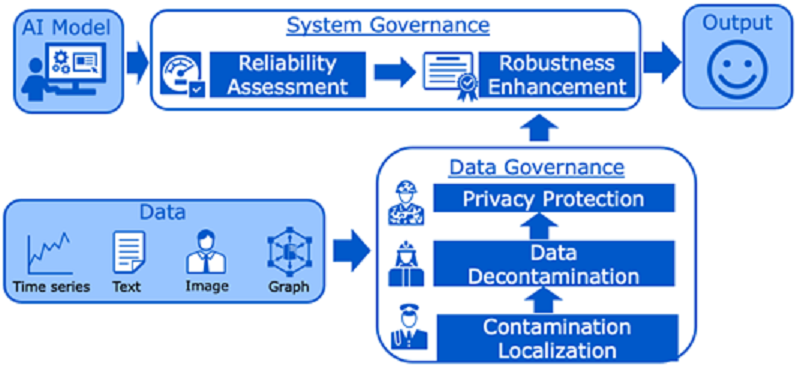

This project aims to develop innovative system testing and data governance engines that identify AI system vulnerabilities, defend against advanced attacks, improve technical robustness, and mitigate technical unfairness and bias. Our engines leverage fine-grained reliability assessment, generalized robustness enhancement, and hardware-based privacy protection techniques to secure both AI models and their data throughout the system development life cycle.

As AI is applied to more domains for mission-critical tasks, our engines can offer “AI System Testing and Data Governance” as a service that makes businesses in these domains more reliable, trustworthy, transparent, and secure. We are developing new solutions that support a variety of AI systems and data types, so they can be applied to a wide range of businesses, including autonomous driving, biometric authentication, finance, healthcare, IoT devices, smart factories, and more.

Team Members: Wei Cheng, Zhengzhang Chen, Haifeng Chen

Publication Tags: AI, artificial intelligence