NeurIPS 2025 in San Diego from December 2nd to December 7th, 2025

NEC Laboratories America was proud to participate in the Conference on Neural Information Processing Systems (NeurIPS 2025), showcasing breakthrough research at the intersection of AI, optimization, sensing, and large-scale systems. Our contributions highlighted our commitment to advancing fundamental science while delivering innovations that translate into real-world impact, from next-generation machine learning theory to powerful applications that strengthen industry and society.

Due to overwhelming interest, NeurIPS 2025 was held in two locations: the Hilton Mexico City Reforma in Mexico City, from Sunday, November 30, through Friday, December 5, 2025, and the San Diego Convention Center in San Diego, from Tuesday, December 2, through Sunday, December 7, 2025. We participated only at the San Diego location.

Conference Presentations

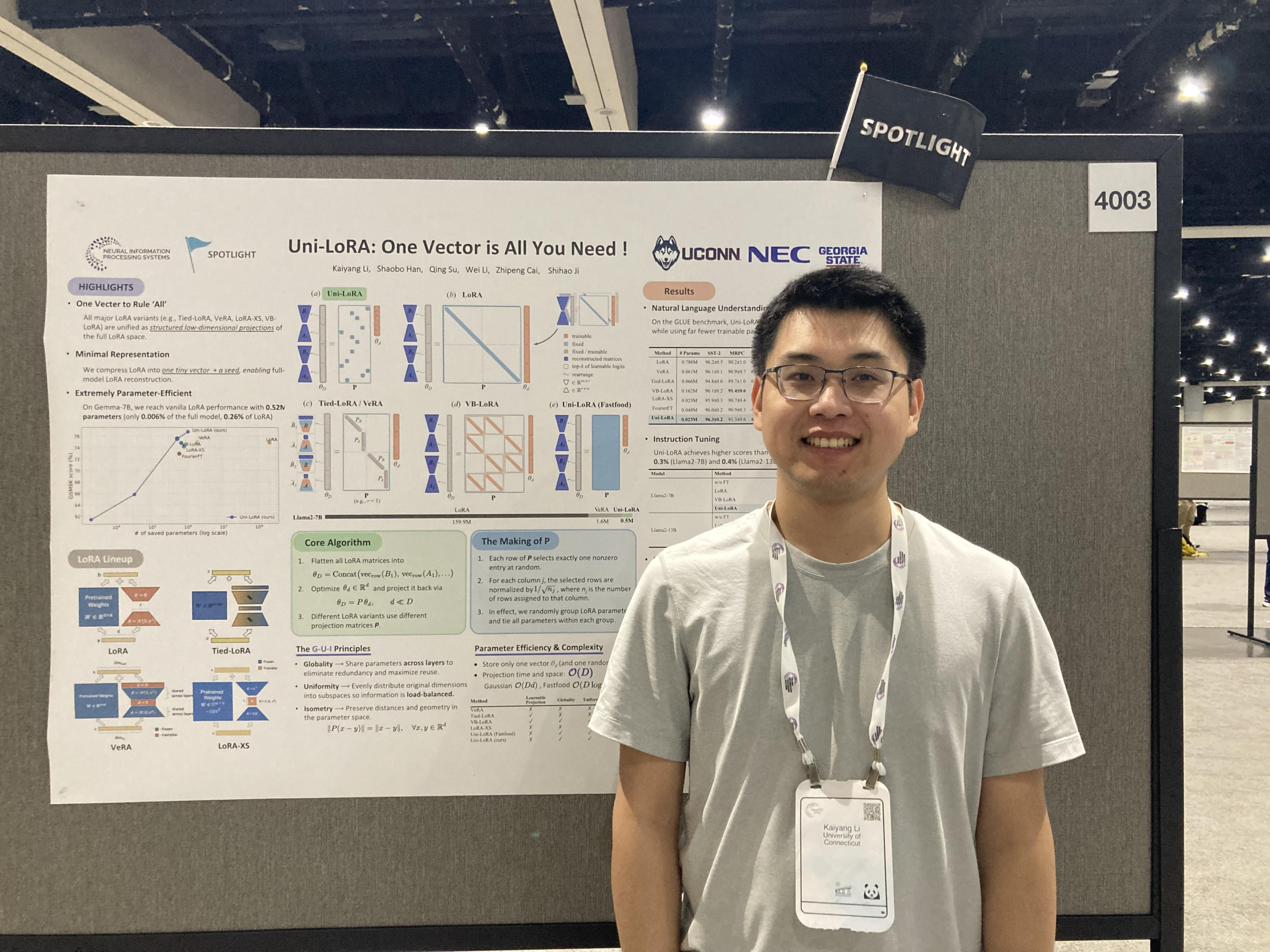

Uni-LoRA: One Vector is All You Need

Spotlight Poster Presentation

December 5, 2025

Shaobo Han

Optical Networking & Sensing

Abstract: Low-Rank Adaptation (LoRA) has become the de facto parameter-efficient fine-tuning (PEFT) method for large language models (LLMs) by constraining weight updates to low-rank matrices. Recent works such as Tied-LoRA, VeRA, and VBLoRA push efficiency further by introducing additional constraints to reduce the trainable parameter space. In this paper, we show that the parameter space reduction strategies employed by these LoRA variants can be formulated within a unified framework, Uni-LoRA, where the LoRA parameter space, flattened as a high-dimensional vector space R^D, can be reconstructed through a projection from a subspace R^d, with d ≪ D. We demonstrate that the fundamental difference among various LoRA methods lies in the choice of the projection matrix, P ∈ R^{D×d}. Most existing LoRA variants rely on layer-wise or structure-specific projections that limit cross-layer parameter sharing, thereby compromising parameter efficiency. In light of this, we introduce an efficient and theoretically grounded projection matrix that is isometric, enabling global parameter sharing and reducing computation overhead. Furthermore, under the unified view of Uni-LoRA, this design requires only a single trainable vector to reconstruct LoRA parameters for the entire LLM, making Uni-LoRA both a unified framework and a “one-vector-only” solution. Extensive experiments on GLUE, mathematical reasoning, and instruction tuning benchmarks demonstrate that Uni-LoRA achieves state-of-the-art parameter efficiency while outperforming or matching prior approaches in predictive performance.
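
To make the projection idea concrete, here is a minimal Python sketch that assumes one particular isometric construction: each of the D flattened LoRA parameters reads exactly one entry of the trainable vector, scaled so that the columns of P are orthonormal (PᵀP = I). This construction, the helper names (`make_isometric_projection`, `project`, `unflatten`), and the toy layer shapes are illustrative assumptions, not the paper's released code; the authors' repository is the authoritative reference.

```python
import math
import random


def make_isometric_projection(D, d, seed=0):
    """Sparse D x d projection stored as (column index, scale) per row.

    Assumed construction: every row has exactly one nonzero entry, and each
    nonzero equals 1/sqrt(number of rows sharing that column), so P^T P = I.
    """
    if D < d:
        raise ValueError("need D >= d so every trainable entry is used")
    rng = random.Random(seed)
    cols = [i % d for i in range(D)]  # guarantees each column has at least one row
    rng.shuffle(cols)                 # scatter shared entries across layers at random
    counts = [0] * d
    for c in cols:
        counts[c] += 1
    scale = [1.0 / math.sqrt(counts[c]) for c in cols]
    return cols, scale


def project(theta_d, cols, scale):
    """Compute theta_D = P @ theta_d without materializing the D x d matrix."""
    return [theta_d[c] * s for c, s in zip(cols, scale)]


def unflatten(theta_D, shapes):
    """Cut the flat vector into row-major matrices (e.g. LoRA A and B per layer)."""
    mats, pos = [], 0
    for rows, ncols in shapes:
        block = theta_D[pos:pos + rows * ncols]
        mats.append([block[r * ncols:(r + 1) * ncols] for r in range(rows)])
        pos += rows * ncols
    if pos != len(theta_D):
        raise ValueError("shapes do not cover the flattened vector")
    return mats


def norm(v):
    return math.sqrt(sum(x * x for x in v))


# Toy model: two layers, hidden size 8, LoRA rank 2 (A is 2x8, B is 8x2 per layer).
shapes = [(2, 8), (8, 2), (2, 8), (8, 2)]
D = sum(r * c for r, c in shapes)  # 64 LoRA parameters in total
d = 8                              # the single trainable vector has 8 entries

cols, scale = make_isometric_projection(D, d)
rng = random.Random(1)
theta_d = [rng.gauss(0.0, 1.0) for _ in range(d)]
theta_D = project(theta_d, cols, scale)
lora_mats = unflatten(theta_D, shapes)

# Isometry: lengths are preserved when mapping from R^d into R^D.
print(math.isclose(norm(theta_d), norm(theta_D)))  # True
```

Because P is stored as one index and one scale per parameter, applying it costs O(D) time and memory, and every layer's A and B matrices draw on the same length-d vector, which is the kind of cross-layer sharing the abstract describes.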

Paper: https://arxiv.org/abs/2506.00799

Our code is available at https://github.com/KaiyangLi1992/Uni-LoRA

Authors: Kaiyang Li, University of Connecticut; Shaobo Han, NEC Labs America; Qing Su, University of Connecticut; Wei Li, Georgia State University; Zhipeng Cai, Georgia State University; Shihao Ji, University of Connecticut.

Location: Exhibit Hall C,D,E #4003

Date and Time: Friday, December 5th, from 4:30 p.m. to 7:30 p.m. PST

Learn more: https://neurips.cc/virtual/2025/loc/san-diego/poster/116600

Human Texts Are Outliers: Detecting LLM-generated Texts via Out-of-distribution Detection

Poster Presentation

December 3, 2025

Wei Cheng

Data Science & System Security

Abstract: The rapid advancement of large language models (LLMs) such as ChatGPT, DeepSeek, and Claude has significantly increased the presence of AI-generated text in digital communication. This trend has heightened the need for reliable detection methods to distinguish between human-authored and machine-generated content. Existing approaches, both zero-shot methods and supervised classifiers, largely conceptualize this task as a binary classification problem, often leading to poor generalization across domains and models. In this paper, we argue that such a binary formulation fundamentally mischaracterizes the detection task by assuming a coherent representation of human-written texts. In reality, human texts do not constitute a unified distribution, and their diversity cannot be effectively captured through limited sampling. This causes previous classifiers to memorize observed out-of-distribution (OOD) characteristics rather than learn the essence of ‘non-ID’ (non-in-distribution) behavior, limiting generalization to unseen human-authored inputs. Based on this observation, we propose reframing the detection task as an OOD detection problem, treating human-written texts as distributional outliers and machine-generated texts as in-distribution (ID) samples.
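
The reframing can be illustrated with a one-class detector: fit a density model only on features of machine-generated text (the ID class) and flag anything that scores as an outlier as human-written. This minimal sketch uses a diagonal-Gaussian (per-dimension z-score) outlier score on synthetic feature vectors; the feature vectors, the scoring rule, and the 5% false-positive threshold are stand-in assumptions, not the OOD method used in the paper.

```python
import math
import random
import statistics


class DiagonalGaussianOOD:
    """One-class outlier scorer fitted on in-distribution (machine-generated) features only."""

    def fit(self, feats_id, fpr=0.05):
        dims = list(zip(*feats_id))
        self.mu = [statistics.fmean(col) for col in dims]
        self.sd = [statistics.pstdev(col) or 1e-8 for col in dims]  # avoid division by zero
        scores = sorted(self.score(f) for f in feats_id)
        # Threshold chosen so that roughly `fpr` of the ID training samples would be flagged.
        k = min(len(scores) - 1, math.ceil((1 - fpr) * len(scores)) - 1)
        self.threshold = scores[k]
        return self

    def score(self, f):
        # Squared standardized distance from the ID mean (a diagonal Mahalanobis distance).
        return sum(((x - m) / s) ** 2 for x, m, s in zip(f, self.mu, self.sd))

    def is_human(self, f):
        # Outliers relative to machine-generated text are labeled human-written.
        return self.score(f) > self.threshold


# Synthetic 4-d "embeddings" from a hypothetical text encoder.
rng = random.Random(0)
machine = [[rng.gauss(0.0, 1.0) for _ in range(4)] for _ in range(200)]
human = [[rng.gauss(4.0, 1.0) for _ in range(4)] for _ in range(50)]

detector = DiagonalGaussianOOD().fit(machine)
human_recall = sum(detector.is_human(f) for f in human) / len(human)
false_alarm_rate = sum(detector.is_human(f) for f in machine) / len(machine)
print(f"human recall={human_recall:.2f}, false alarms={false_alarm_rate:.2f}")
```

No human-written samples are used to fit the detector, which mirrors the idea of treating human text as an open-ended outlier class rather than a second class to be modeled.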

Authors: Cong Zeng*, MBZUAI; Shengkun Tang*, MBZUAI; Yuanzhou Chen, UCLA; Zhiqiang Shen, MBZUAI; Wenchao Yu, NEC Laboratories America; Xujiang Zhao, NEC Laboratories America; Haifeng Chen, NEC Laboratories America; Wei Cheng, NEC Laboratories America; Zhiqiang Xu, MBZUAI. *Primary authors.

Location: Exhibit Hall C,D,E #1306

Date and Time: Wednesday, December 3rd, from 4:30 p.m. to 7:30 p.m. PST

Session: NEC TR #: 2025-TR137

Learn more: https://neurips.cc/virtual/2025/loc/san-diego/poster/120309

iFinder: Structured Zero-Shot Vision-Based LLM Grounding for Dash-Cam Video Reasoning

Poster Presentation

December 3, 2025

Abhishek Aich

Media Analytics

Abstract: Grounding large language models (LLMs) in domain-specific tasks like post-hoc dash-cam driving video analysis is challenging due to their general-purpose training and lack of structured inductive biases. As vision is often the sole modality available for such analysis (i.e., no LiDAR, GPS, etc.), existing video-based vision-language models (V-VLMs) struggle with spatial reasoning, causal inference, and explainability of events in the input video. To this end, we introduce iFinder, a structured semantic grounding framework that decouples perception from reasoning by translating dash-cam videos into a hierarchical, interpretable data structure for LLMs. iFinder operates as a modular, training-free pipeline that employs pretrained vision models to extract critical cues (object pose, lane positions, and object trajectories), which are hierarchically organized into frame- and video-level structures. Combined with a three-block prompting strategy, it enables step-wise, grounded reasoning for the LLM to refine a peer V-VLM’s outputs and provide accurate reasoning. Evaluations on four public dash-cam video benchmarks show that iFinder’s proposed grounding with domain-specific cues, especially object orientation and global context, significantly outperforms end-to-end V-VLMs in this zero-shot setting, with up to 39% gains in accident reasoning accuracy. By grounding LLMs with driving domain-specific representations, iFinder offers a zero-shot, interpretable, and reliable alternative to end-to-end V-VLMs for post-hoc driving video understanding.
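
A minimal sketch of what a hierarchical, LLM-readable structure of this kind might look like, assuming frame-level records of per-object cues and video-level trajectories plus a global scene context. The class names, field names, units, and JSON serialization are hypothetical choices for illustration; the actual iFinder schema and its three-block prompt are not reproduced here.

```python
import json
from dataclasses import asdict, dataclass, field
from typing import Dict, List, Tuple


@dataclass
class ObjectCue:
    track_id: int
    category: str           # e.g. "car", "pedestrian"
    orientation_deg: float  # hypothetical: heading relative to the ego camera
    lane: str               # hypothetical: "ego", "left", "right", or "off-road"


@dataclass
class FrameRecord:
    frame_index: int
    objects: List[ObjectCue] = field(default_factory=list)


@dataclass
class VideoRecord:
    video_id: str
    global_context: str  # hypothetical free-text scene summary (road type, weather)
    # track_id -> [(frame_index, x, y), ...] in image coordinates
    trajectories: Dict[int, List[Tuple[int, float, float]]] = field(default_factory=dict)
    frames: List[FrameRecord] = field(default_factory=list)

    def to_prompt_json(self) -> str:
        """Serialize the whole hierarchy (video -> frames -> objects) for an LLM prompt."""
        return json.dumps(asdict(self), indent=2)


record = VideoRecord(
    video_id="clip_0001",
    global_context="two-lane urban road, daytime",
    trajectories={7: [(0, 410.0, 300.0), (1, 402.5, 305.0)]},
    frames=[FrameRecord(0, [ObjectCue(7, "car", -15.0, "left")]),
            FrameRecord(1, [ObjectCue(7, "car", -20.0, "ego")])],
)
print(record.to_prompt_json())
```

Keeping perception output in an explicit, typed structure like this is what lets the reasoning model cite concrete cues (for example, a lane change of track 7 between frames) instead of re-deriving them from pixels.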

Authors: Manyi Yao, University of California, Riverside; Bingbing Zhuang, NEC Laboratories America; Sparsh Garg, NEC Laboratories America; Amit Roy-Chowdhury, University of California, Riverside; Christian Shelton, University of California, Riverside; Manmohan Chandraker, NEC Laboratories America and University of California, San Diego; Abhishek Aich, NEC Laboratories America.

Location: Exhibit Hall C,D,E #4804

Date and Time: Wednesday, December 3rd, from 4:30 p.m. to 7:30 p.m. PST

Learn more: https://neurips.cc/virtual/2025/loc/san-diego/poster/116305

SolverLLM: Leveraging Test-Time Scaling for Optimization Problem via LLM-Guided Search

Poster Presentation

December 4, 2025

Xujiang Zhao

Data Science & System Security

Abstract: Large Language Models (LLMs) offer promising capabilities for tackling complex reasoning tasks, including optimization problems. However, existing methods either rely on prompt engineering, which leads to poor generalization across problem types, or require costly supervised training. We introduce SolverLLM, a training-free framework that leverages test-time scaling to solve diverse optimization problems. Rather than solving directly, SolverLLM generates mathematical formulations and translates them into solver-ready code, guided by a novel Monte Carlo Tree Search (MCTS) strategy. To enhance the search process, we modify classical MCTS with (1) dynamic expansion for adaptive formulation generation, (2) prompt backpropagation to guide exploration via outcome-driven feedback, and (3) uncertainty backpropagation to incorporate reward reliability into decision-making. Experiments on six standard benchmark datasets demonstrate that SolverLLM outperforms both prompt-based and learning-based baselines, achieving strong generalization without additional training.
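
The following Python sketch shows the generic MCTS loop (selection, expansion, rollout, backpropagation) over a toy "formulation" built from discrete choices, with one hypothetical take on uncertainty backpropagation: each reward is weighted by 1 − uncertainty before it is averaged into node values. Dynamic expansion and prompt backpropagation are not modeled, and the toy evaluator stands in for generating and running solver code; none of this is SolverLLM's actual implementation.

```python
import math
import random


class Node:
    def __init__(self, state, parent=None):
        self.state = state            # tuple of choices made so far (a partial formulation)
        self.parent = parent
        self.children = {}            # action -> Node
        self.visits = 0
        self.weighted_reward = 0.0    # sum of reliability-weighted rewards
        self.weight = 0.0             # sum of reliability weights

    def value(self):
        return self.weighted_reward / self.weight if self.weight > 0 else 0.0


def uct_child(node, c):
    # Standard UCT: exploit the weighted mean, explore rarely visited children.
    return max(node.children.values(),
               key=lambda ch: ch.value() + c * math.sqrt(math.log(node.visits) / ch.visits))


def mcts(actions, depth, evaluate, iterations=1000, c=1.4, seed=0):
    rng = random.Random(seed)
    root = Node(())
    for _ in range(iterations):
        node = root
        # 1) Selection: descend through fully expanded, non-terminal nodes.
        while len(node.state) < depth and len(node.children) == len(actions):
            node = uct_child(node, c)
        # 2) Expansion: add one untried action (fixed branching, unlike dynamic expansion).
        if len(node.state) < depth:
            a = rng.choice([a for a in actions if a not in node.children])
            node.children[a] = Node(node.state + (a,), node)
            node = node.children[a]
        # 3) Rollout: complete the formulation with random choices.
        state = node.state
        while len(state) < depth:
            state += (rng.choice(actions),)
        reward, uncertainty = evaluate(state)
        # 4) Backpropagation, down-weighting unreliable rewards (assumed form).
        w = max(1e-6, 1.0 - uncertainty)
        while node is not None:
            node.visits += 1
            node.weighted_reward += w * reward
            node.weight += w
            node = node.parent
    # Read out the most-visited path from the root.
    node = root
    while node.children:
        node = max(node.children.values(), key=lambda ch: ch.visits)
    return node.state


TARGET = (1, 0, 1)  # hypothetical "correct formulation" for the toy evaluator


def toy_evaluate(state):
    reward = sum(a == b for a, b in zip(state, TARGET)) / len(TARGET)
    uncertainty = 0.1 if state[0] == 1 else 0.5  # hypothetical reliability of the reward
    return reward, uncertainty


best = mcts(actions=(0, 1), depth=3, evaluate=toy_evaluate)
print(best)
```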

Authors: Dong Li, Baylor University; Xujiang Zhao*, NEC Labs America; Linlin Yu, Augusta University; Yanchi Liu, NEC Labs America; Wei Cheng, NEC Labs America; Zhengzhang Chen, NEC Labs America; Zhong Chen, Southern Illinois University; Feng Chen, University of Texas at Dallas; Chen Zhao*, Baylor University; Haifeng Chen, NEC Labs America. *Chen Zhao and Xujiang Zhao are corresponding authors.

Location: Exhibit Hall C,D,E #2011

Date and Time: Thursday, December 4th, from 4:30 p.m. to 7:30 p.m. PST

Session: NEC TR #: 2025-TR128

Learn more: https://neurips.cc/virtual/2025/poster/116215

DISC: Dynamic Decomposition Improves LLM Inference Scaling

Poster Presentation

December 5, 2025

Wei Cheng

Data Science & System Security

Abstract: Inference scaling methods often rely on decomposing problems into steps, followed by sampling and selecting the best next steps. However, these steps and their sizes are typically fixed or depend on domain knowledge. We propose dynamic decomposition, a method that adaptively and automatically breaks down solution and reasoning traces into manageable steps during inference. By allocating compute more effectively, particularly by subdividing challenging steps and sampling them more frequently, dynamic decomposition significantly enhances inference efficiency. Experiments on benchmarks such as APPS, MATH, and LiveCodeBench demonstrate that dynamic decomposition outperforms static approaches, including token-level, sentence-level, and single-step decompositions. These findings highlight the potential of dynamic decomposition to improve a wide range of inference scaling techniques.
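
The core idea, splitting a step further only where it appears hard, can be sketched as a recursive halving rule. In this minimal sketch the `difficulty` callable, the 0.5 threshold, and the doubling sample budget are hypothetical; in practice difficulty would have to be estimated from sampled continuations, and DISC's actual splitting criterion may differ.

```python
def dynamic_decompose(step, difficulty, threshold=0.5, min_len=1, depth=0):
    """Recursively halve a solution step while it looks too hard.

    Returns (segment, depth) pairs in order; deeper segments are the ones a
    search procedure would sample more often.
    """
    if len(step) <= min_len or difficulty(step) < threshold:
        return [(step, depth)]
    mid = len(step) // 2
    return (dynamic_decompose(step[:mid], difficulty, threshold, min_len, depth + 1)
            + dynamic_decompose(step[mid:], difficulty, threshold, min_len, depth + 1))


def sample_budget(segments, base=4):
    """Hypothetical allocation rule: double the samples per extra level of subdivision."""
    return [base * 2 ** d for _, d in segments]


# Toy trace: the character "f" marks the one genuinely hard part.
hard = set("f")


def toy_difficulty(segment):
    return 1.0 if any(ch in hard for ch in segment) else 0.0


segments = dynamic_decompose("abcdefgh", toy_difficulty)
print(segments)                 # [('abcd', 1), ('e', 3), ('f', 3), ('gh', 2)]
print(sample_budget(segments))  # [8, 32, 32, 16]
```

The easy prefix stays one coarse step, while the region around the hard token is cut into the finest steps and receives the largest sampling budget, which is the compute reallocation the abstract describes.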

Authors: Jonathan Light, Rensselaer Polytechnic Institute; Wei Cheng, NEC Laboratories America, Inc.; Wu Yue, Princeton University; Masafumi Oyamada, NEC Corporation; Mengdi Wang, Princeton University; Santiago Paternain, Rensselaer Polytechnic Institute; Haifeng Chen, NEC Laboratories America, Inc.

Location: Exhibit Hall C,D,E #511

Date and Time: Friday, December 5th, from 4:30 p.m. to 7:30 p.m. PST

Session: NEC TR #: 2025-TR087

Learn more: https://neurips.cc/virtual/2025/loc/san-diego/poster/116553

NEC Labs America: https://www.nec-labs.com/blog/disc-dynamic-decomposition-improves-llm-inference-scaling-dl4c/

TimeXL: Explainable Multi-modal Time Series Prediction with LLM-in-the-Loop

Poster Presentation

December 5, 2025

Wenchao Yu

Data Science & System Security

Abstract: Time series analysis provides essential insights into real-world system dynamics and informs downstream decision-making, yet many existing methods overlook the rich contextual signals present in auxiliary modalities. To bridge this gap, we introduce TimeXL, a multi-modal prediction framework that integrates a prototype-based time series encoder with three collaborating Large Language Models (LLMs) to deliver more accurate predictions and interpretable explanations. First, a multi-modal prototype-based encoder processes both time series and textual inputs to generate preliminary forecasts alongside case-based rationales. These outputs then feed into a prediction LLM, which refines the forecasts by reasoning over the encoder’s predictions and explanations. Next, a reflection LLM compares the predicted values against the ground truth, identifying textual inconsistencies or noise. Guided by this feedback, a refinement LLM iteratively enhances text quality and triggers encoder retraining. This closed-loop workflow of prediction, critique (reflection), and refinement continuously boosts the framework’s performance and interpretability. Empirical evaluations on four real-world datasets demonstrate that TimeXL achieves up to 8.9% improvement in AUC and produces human-centric, multi-modal explanations, highlighting the power of LLM-driven reasoning for time series prediction.
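
The closed loop in this abstract can be sketched as plain orchestration code in which every component is a caller-supplied function. The function and parameter names are hypothetical placeholders for the prototype-based encoder and the prediction, reflection, and refinement LLMs, and the toy stubs exist only to exercise the control flow. Because the reflection step compares against ground truth, a loop like this would run on training or validation data.

```python
def closed_loop(series, texts, labels, train_encoder, predict_llm,
                reflect_llm, refine_llm, score, rounds=3):
    """Hypothetical prediction -> reflection -> refinement loop."""
    history = []
    for _ in range(rounds):
        encoder = train_encoder(series, texts, labels)              # (re)train on current texts
        base_preds, rationales = encoder(series, texts)             # forecasts + case-based rationales
        preds = predict_llm(series, texts, base_preds, rationales)  # prediction LLM refines forecasts
        history.append(score(preds, labels))
        feedback = reflect_llm(preds, labels, texts)                # critique against ground truth
        texts = refine_llm(texts, feedback)                         # rewrite noisy or inconsistent text
    return texts, history


# --- Toy stubs (no learning, no LLM calls) ---
def toy_train_encoder(series, texts, labels):
    def encoder(s, txts):  # keyword rule standing in for a prototype-based encoder
        preds = [1 if "up" in t else 0 for t in txts]
        return preds, ["keyword 'up' found" if p else "no 'up' keyword" for p in preds]
    return encoder


def toy_predict_llm(series, texts, base_preds, rationales):
    return base_preds  # a real prediction LLM would reason over preds and rationales


def toy_reflect_llm(preds, labels, texts):
    return [i for i, (p, y) in enumerate(zip(preds, labels)) if p != y]


def toy_refine_llm(texts, wrong):
    out = list(texts)
    for i in wrong:
        out[i] = out[i].replace("up", "down") if "up" in out[i] else out[i] + " up"
    return out


def accuracy(preds, labels):
    return sum(p == y for p, y in zip(preds, labels)) / len(labels)


series = [[0.0, 1.0], [1.0, 1.0], [0.5, 0.9], [1.0, 0.2]]  # unused by the stubs
labels = [1, 0, 1, 0]
texts = ["prices up", "prices up", "flat", "prices down"]
final_texts, history = closed_loop(series, texts, labels, toy_train_encoder,
                                   toy_predict_llm, toy_reflect_llm,
                                   toy_refine_llm, accuracy)
print(history)  # [0.5, 1.0, 1.0]
```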

Authors: Yushan Jiang, University of Connecticut; Wenchao Yu, NEC Laboratories America; Geon Lee, KAIST; Dongjin Song, University of Connecticut & NEC Laboratories America; Kijung Shin, KAIST; Wei Cheng, NEC Laboratories America; Yanchi Liu, NEC Laboratories America; Haifeng Chen, NEC Laboratories America.

Location: Exhibit Hall C,D,E #2307

Date and Time: Friday, December 5th, from 11 a.m. to 2 p.m. PST

Session: NEC TR #: 2025-TR034

Learn more: https://neurips.cc/virtual/2025/loc/san-diego/poster/117594

Multi-Modal View Enhanced Large Vision Models for Long-Term Time Series Forecasting

Poster Presentation

December 5, 2025

Wenchao Yu

Data Science & System Security

Abstract: Time series, typically represented as numerical sequences, can also be transformed into images and texts, offering multi-modal views (MMVs) of the same underlying signal. These MMVs can reveal complementary patterns and enable the use of powerful pre-trained large models, such as large vision models (LVMs), for long-term time series forecasting (LTSF). However, as we identified in this work, the state-of-the-art (SOTA) LVM-based forecaster exhibits an inductive bias toward “forecasting periods”. To harness this bias, we propose DMMV, a novel decomposition-based multi-modal view framework that leverages trend-seasonal decomposition and a novel backcast-residual-based adaptive decomposition to integrate MMVs for LTSF. Comparative evaluations against 14 SOTA models across diverse datasets show that DMMV outperforms single-view and existing multi-modal baselines, achieving the best mean squared error (MSE) on 6 out of 8 benchmark datasets.
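
As a rough illustration of two ingredients mentioned in this abstract, the sketch performs a classic moving-average trend-seasonal decomposition and folds a series into a 2D array with one period per row, a common way to present a series to a vision model. Both are assumptions for illustration; DMMV's backcast-residual adaptive decomposition and its exact image construction are not shown, and the function names are hypothetical.

```python
import math


def moving_average_decompose(x, kernel=5):
    """Split a 1D series into a trend (centered moving average) and a seasonal remainder."""
    if kernel % 2 == 0:
        raise ValueError("kernel must be odd")
    pad = kernel // 2
    padded = [x[0]] * pad + list(x) + [x[-1]] * pad  # repeat edge values so lengths match
    trend = [sum(padded[i:i + kernel]) / kernel for i in range(len(x))]
    seasonal = [v - t for v, t in zip(x, trend)]
    return trend, seasonal


def fold_to_image(x, period):
    """Fold the most recent full periods into rows: shape (num_periods, period)."""
    n = (len(x) // period) * period
    tail = list(x[len(x) - n:])
    return [tail[i:i + period] for i in range(0, n, period)]


# Toy series: linear trend plus a period-12 seasonal cycle.
x = [0.1 * t + math.sin(2 * math.pi * t / 12) for t in range(48)]
trend, seasonal = moving_average_decompose(x, kernel=13)
image = fold_to_image(seasonal, period=12)
print(len(image), len(image[0]))  # 4 12
```

Folding by the period aligns the same phase of each cycle in a column, which is one intuition for why an image-based forecaster would be biased toward periodic structure and why separating out the trend first can help.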

The code for this paper is available at: https://github.com/D2I-Group/dmmv

Authors: ChengAo Shen, University of Houston; Wenchao Yu, NEC Laboratories America; Ziming Zhao, University of Houston; Dongjin Song, University of Connecticut; Wei Cheng, NEC Laboratories America; Haifeng Chen, NEC Laboratories America; Jingchao Ni, University of Houston.

Location: Exhibit Hall C,D,E #2309

Date and Time: Friday, December 5th, from 11 a.m. to 2 p.m. PST

Session: NEC TR #: 2025-TR130

Learn more: https://neurips.cc/virtual/2025/loc/san-diego/poster/118223